Deploying K8S Offline with kubeadm

Installation Notes

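I recently subscribed to several courses on Geek Time (极客时间). Two of them, Ding Qi's "MySQL in Practice: 45 Lectures" (《MySQL实战45讲》) and Zhang Lei's "In-Depth Analysis of Kubernetes" (《深入剖析Kubernetes》), are excellent and easy to follow. Highly recommended!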

kubeadm is a tool released by the official Kubernetes community for quickly deploying Kubernetes clusters. It can bring up a cluster with just one or two commands.

Kubernetes official website: https://kubernetes.io

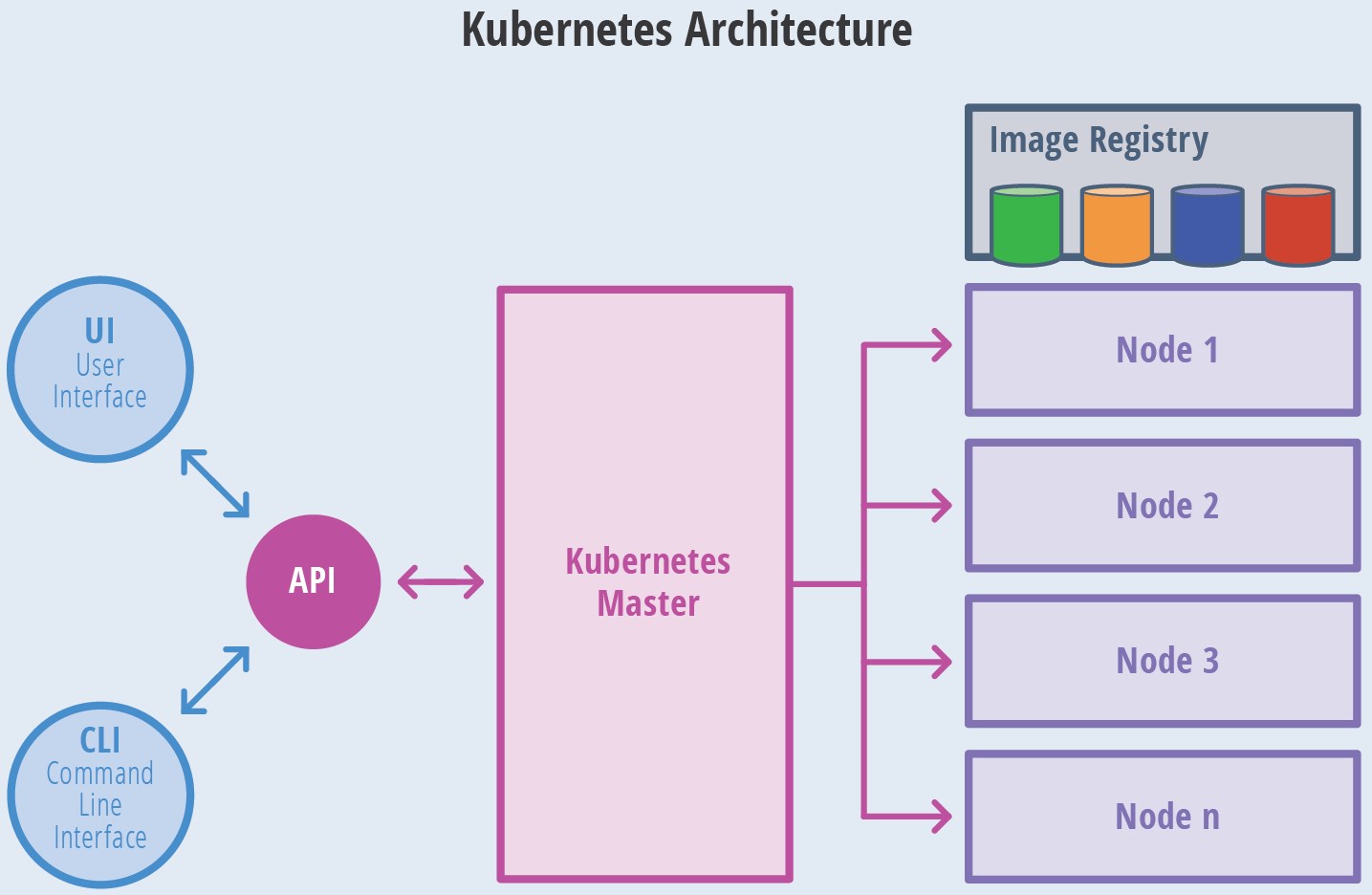

Kubernetes project architecture:

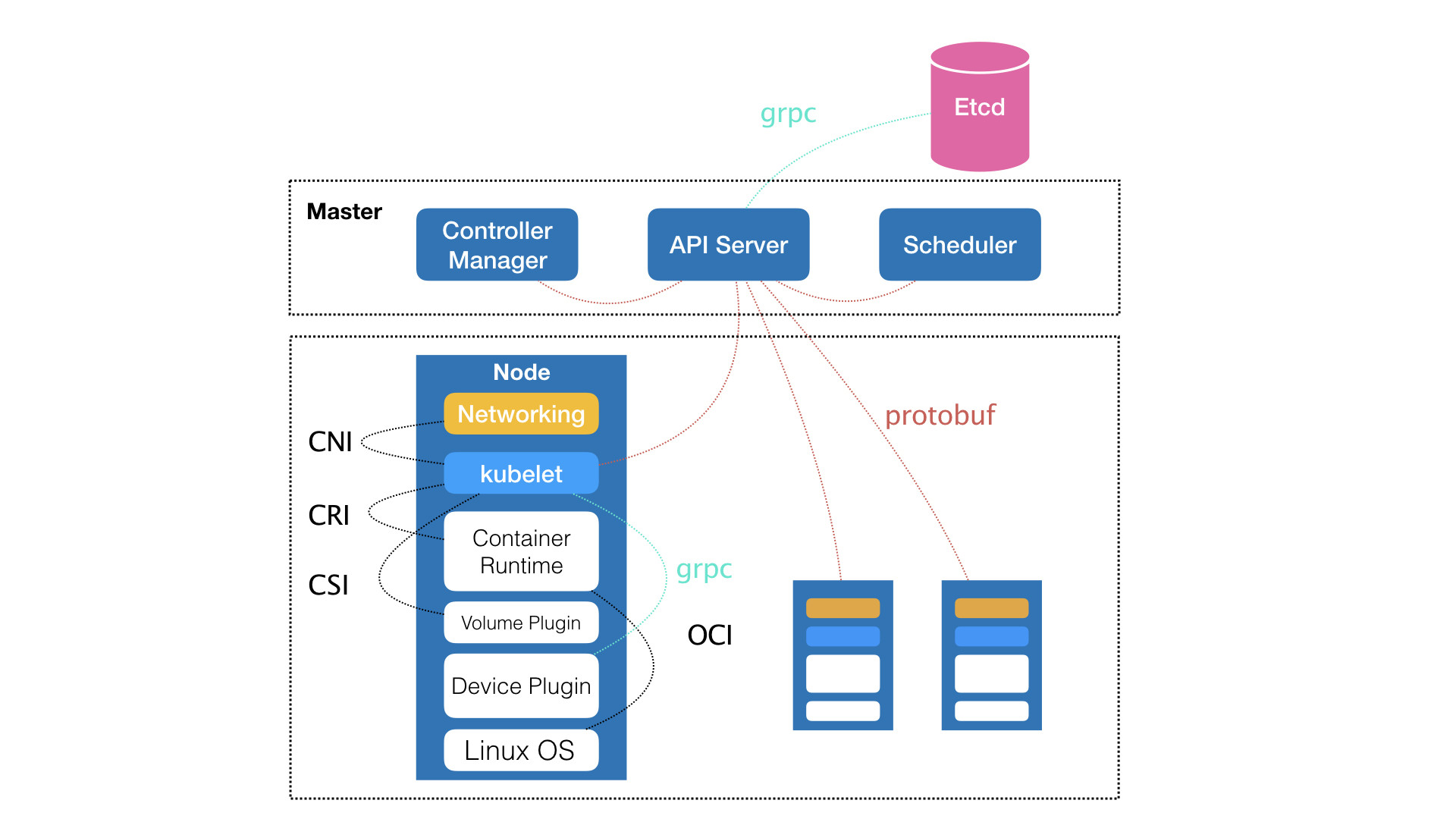

Kubernetes node architecture:

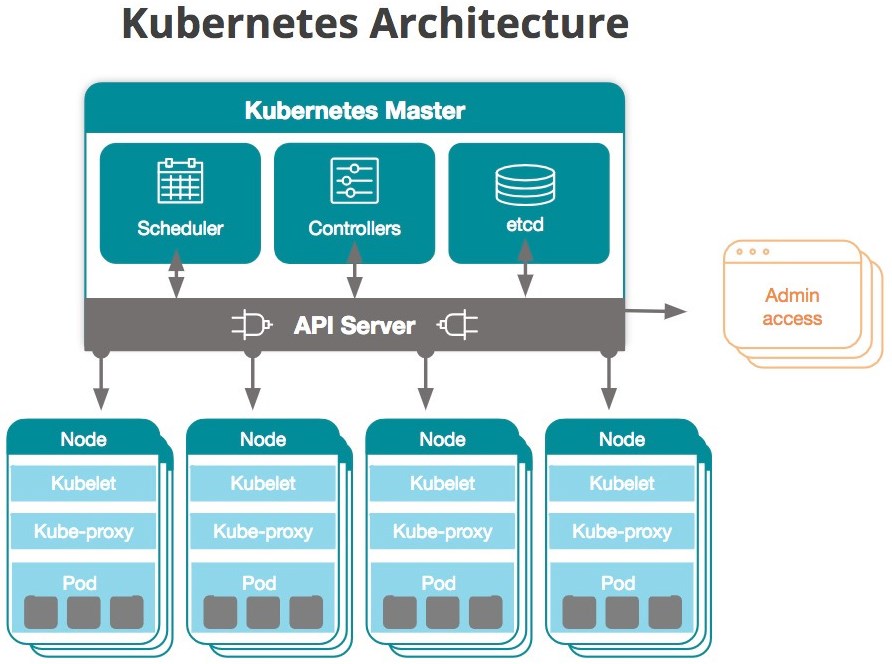

Kubernetes component architecture:

Installation Environment

1>. Use kubeadm to deploy a three-node Kubernetes cluster. Node information:

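| Hostname | IP | OS | Node type | Spec |
| --- | --- | --- | --- | --- |
| k8s-master | 10.100.2.20 | CentOS 7.9 | master | 2 CPU cores, 3 GB RAM |
| k8s-node1 | 10.100.2.21 | CentOS 7.9 | node | 2 CPU cores, 3 GB RAM |
| k8s-node2 | 10.100.2.22 | CentOS 7.9 | node | 2 CPU cores, 3 GB RAM |

Note: to install the Rook storage plugin later, each VM also needs a separate, unpartitioned disk (sdb).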

2>. Operating system configuration

Note: unless stated otherwise, run every step on all three machines.

Disable the firewall and SELinux

# systemctl disable --now firewalld

# setenforce 0 && sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

Disable swap

# swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

Set the hostnames (run each hostnamectl command on its own node) and the hosts file

# hostnamectl set-hostname k8s-master

# hostnamectl set-hostname k8s-node1

# hostnamectl set-hostname k8s-node2

# cat >> /etc/hosts << EOF

10.100.2.20 k8s-master

10.100.2.21 k8s-node1

10.100.2.22 k8s-node2

EOF
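Appending with cat >> /etc/hosts duplicates the entries every time the step is rerun. A minimal idempotent sketch, assuming bash and GNU grep; add_host_entry and add_cluster_hosts are hypothetical helper names, not part of the original steps:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file only when the
# hostname is not already listed, so the step can be rerun safely.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -w matches whole words, so "k8s-node1" does not match "k8s-node10"
  if grep -qw -- "$name" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Add the three cluster nodes from the table above (defaults to /etc/hosts).
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  add_host_entry 10.100.2.20 k8s-master "$file"
  add_host_entry 10.100.2.21 k8s-node1 "$file"
  add_host_entry 10.100.2.22 k8s-node2 "$file"
}
```

Run add_cluster_hosts as root on each node; passing a scratch file first (for example add_cluster_hosts /tmp/hosts.test) lets you check the result before touching /etc/hosts.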

On the master node, set up passwordless SSH login

# ssh-keygen -t rsa

# ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.100.2.21

# ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.100.2.22

# ssh 10.100.2.21

# ssh 10.100.2.22

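Configure kernel parameters (the net.bridge.* keys only exist after the br_netfilter module is loaded, so load it first and make it persistent across reboots)

# modprobe br_netfilter

# echo br_netfilter > /etc/modules-load.d/k8s.conf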

# cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# sysctl --system # a plain sysctl -p only reads /etc/sysctl.conf, not /etc/sysctl.d/k8s.conf
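To confirm the settings actually took effect, the values can be read back from /proc/sys. A sketch assuming bash; check_k8s_sysctls and the PROC_SYS override are hypothetical names introduced here for illustration and testing:

```bash
#!/usr/bin/env bash
# Hypothetical check: print OK/WRONG/MISSING for each kernel parameter
# written to /etc/sysctl.d/k8s.conf, and return non-zero if any is not 1.
# PROC_SYS defaults to /proc/sys and can point elsewhere for testing.
check_k8s_sysctls() {
  local root="${PROC_SYS:-/proc/sys}" key path val rc=0
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables; do
    # sysctl keys map to paths: net.ipv4.ip_forward -> net/ipv4/ip_forward
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$path" ]; then
      echo "MISSING $key (is the br_netfilter module loaded?)"
      rc=1
      continue
    fi
    val=$(cat "$path")
    if [ "$val" = "1" ]; then
      echo "OK      $key = 1"
    else
      echo "WRONG   $key = $val"
      rc=1
    fi
  done
  return "$rc"
}
```

A MISSING line for the net.bridge.* keys usually means br_netfilter is not loaded, which is exactly the case a plain sysctl -p would hide.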

Configure time synchronization

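# yum install -y chrony

# vim /etc/chrony.conf

server 0.centos.pool.ntp.org iburst # time source

# systemctl enable chronyd && systemctl restart chronyd # restart so the edited time source takes effect

# chronyc -a makestep # step the clock immediately

Install Kubernetes and Docker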

1>. Online installation

# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

# wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

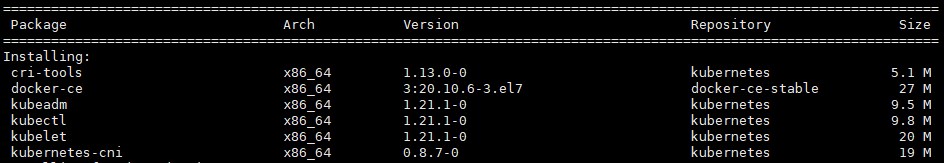

# yum install docker-ce kubelet kubeadm kubectl kubernetes-cni cri-tools

2>. Offline installation

On a machine with Internet access, download the RPM packages:

List all available versions of docker-ce and kubeadm:

# yum list docker-ce --showduplicates # a specific version can be downloaded and installed

# yum list kubeadm --showduplicates

Download:

# yum install --downloadonly --downloaddir /root/k8s docker-ce kubelet kubeadm kubectl kubernetes-cni cri-tools

Copy the downloaded RPM packages to each host and install them.

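Note: only the packages served by docker-ce.repo and kubernetes.repo really need to be copied: docker-ce, kubelet, kubeadm, kubectl, kubernetes-cni and cri-tools, plus the dependencies that come from those same repositories (for example, docker-ce also requires docker-ce-cli and containerd.io from docker-ce.repo).

All other packages can be installed from the official CentOS repositories.

# yum install *.rpm

3>. Start Docker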

# systemctl enable docker --now

Note: before initializing with kubeadm, first edit /etc/docker/daemon.json:

# vim /etc/docker/daemon.json

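{
  "registry-mirrors": ["https://45usbu2w.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

Note: JSON does not allow comments, so do not put "#" remarks inside daemon.json, or Docker will fail to start. The registry-mirrors entry is an Aliyun mirror that failed to download anything in my tests and can be removed.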

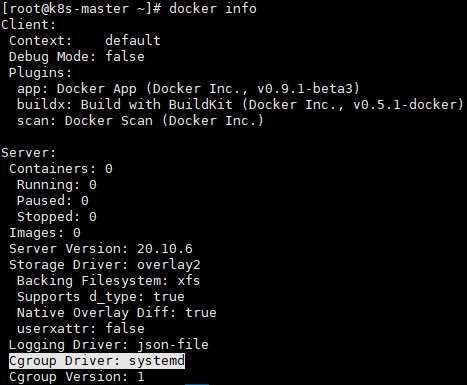

After the change, restart Docker and check docker info:

# systemctl restart docker

# docker info

Cgroup Driver: systemd # before the change: Cgroup Driver: cgroupfs

Otherwise, kubeadm init shows the following warning:

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

4>. Initialize kubeadm

# systemctl enable kubelet # enable at boot. Starting kubelet now would fail; it only starts successfully after kubeadm has generated its configuration files.

# kubelet --version

Kubernetes v1.21.1

Note: kubeadm init runs only on the master node. Check the API version used in kubeadm.yaml:

# kubeadm config print init-defaults

apiVersion: kubeadm.k8s.io/v1beta2

Create the YAML file used by kubeadm:

# vim kubeadm.yaml

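apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
controllerManager:
  extraArgs:
    horizontal-pod-autoscaler-use-rest-clients: "true"
    horizontal-pod-autoscaler-sync-period: "10s"
    node-monitor-grace-period: "10s"
apiServer:
  extraArgs:
    runtime-config: "api/all=true"
kubernetesVersion: "v1.21.1"
# Optional image mirror reachable from mainland China; it did not work in my tests
# and can be removed. Remove it when loading the k8s.gcr.io images offline as
# described below, otherwise kubeadm looks for every image under this repository.
imageRepository: registry.aliyuncs.com/google_containers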

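Here, horizontal-pod-autoscaler-use-rest-clients: "true" allows the Horizontal Pod Autoscaler to scale on custom metrics (Custom Metrics). kubeadm only recognizes the lowercase field name extraArgs; these flags date from older releases, so check the kube-controller-manager and kube-apiserver flag references for your version before relying on them.

kubernetesVersion: "v1.21.1" is the Kubernetes version. It can also be written as "stable-1.21", meaning the latest stable 1.21 release (v1.21.1 at the time of writing). kubeadm resolves such a label over the Internet, so use an explicit version for offline installs.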

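You can also export the default configuration with the following command and then edit it (at least localAPIEndpoint.advertiseAddress and nodeRegistration.name, which default to placeholder values):

# kubeadm config print init-defaults > kubeadm.yaml

Online installation: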

# kubeadm init --config kubeadm.yaml

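Note: if the installation fails or you want to change the configuration, reset it with kubeadm reset and then initialize again. After resetting, delete the $HOME/.kube directory (rm -rf $HOME/.kube).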

You can also skip the configuration file and install with the defaults:

# kubeadm init

Offline installation:

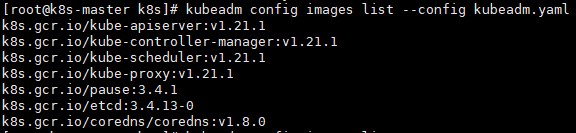

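Pull the images. First list the required images:

# kubeadm config images list --config kubeadm.yaml # without --config kubeadm.yaml, the default images from the official k8s.gcr.io registry are listed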

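Pull the images on a server that can reach k8s.gcr.io (from mainland China this requires a proxy or VPN):

Alternatively, pull them from a registry mirror reachable from China and retag them with docker tag. For this test I did not want to hunt for a mirror, so I simply pulled the images on a Vultr VPS.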

# kubeadm config images pull --config kubeadm.yaml

Or pull them one by one with docker pull:

# docker pull k8s.gcr.io/kube-apiserver:v1.21.1

# docker pull k8s.gcr.io/kube-controller-manager:v1.21.1

# docker pull k8s.gcr.io/kube-scheduler:v1.21.1

# docker pull k8s.gcr.io/kube-proxy:v1.21.1

# docker pull k8s.gcr.io/pause:3.4.1

# docker pull k8s.gcr.io/etcd:3.4.13-0

# docker pull k8s.gcr.io/coredns/coredns:v1.8.0

Package the images:

# docker image save k8s.gcr.io/kube-apiserver > kube-apiserver.tar

# docker image save k8s.gcr.io/kube-proxy > kube-proxy.tar

# docker image save k8s.gcr.io/kube-controller-manager > kube-controller-manager.tar

# docker image save k8s.gcr.io/kube-scheduler > kube-scheduler.tar

# docker image save k8s.gcr.io/pause > pause.tar

# docker image save k8s.gcr.io/coredns/coredns > coredns.tar

# docker image save k8s.gcr.io/etcd > etcd.tar

Copy the tar archives to the three test VMs and load them:

# docker image load < kube-apiserver.tar

# docker image load < kube-proxy.tar

# docker image load < kube-controller-manager.tar

# docker image load < kube-scheduler.tar

# docker image load < pause.tar

# docker image load < coredns.tar

# docker image load < etcd.tar
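Saving and loading each image by hand gets repetitive, and the same pattern recurs below for Weave, the Dashboard and Rook. A minimal bash sketch, assuming Docker is installed and the images are referenced by name:tag; image_tar_name, save_images and load_images are hypothetical helpers, not part of kubeadm or Docker:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for moving images between hosts as tar archives.

# Map an image reference to a tar file name, e.g.
#   k8s.gcr.io/coredns/coredns:v1.8.0 -> coredns_v1.8.0.tar
image_tar_name() {
  local base="${1##*/}" name tag   # drop registry and path: coredns:v1.8.0
  case "$base" in
    *:*) name="${base%%:*}"; tag="${base#*:}" ;;
    *)   name="$base";       tag=latest ;;   # no tag in the reference
  esac
  printf '%s_%s.tar\n' "$name" "$tag"
}

# On the Internet-connected host: save each image to its own archive.
save_images() {
  local ref
  for ref in "$@"; do
    docker image save -o "$(image_tar_name "$ref")" "$ref"
  done
}

# On each cluster node: load every archive in a directory (default: .).
load_images() {
  local f
  for f in "${1:-.}"/*.tar; do
    [ -e "$f" ] || continue   # no archives: the glob stays unexpanded
    docker image load -i "$f"
  done
}
```

For example, save_images $(kubeadm config images list --config kubeadm.yaml) on the connected host, then copy the *.tar files and run load_images in that directory on each VM. The command substitution is deliberately unquoted so each listed image becomes a separate argument.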

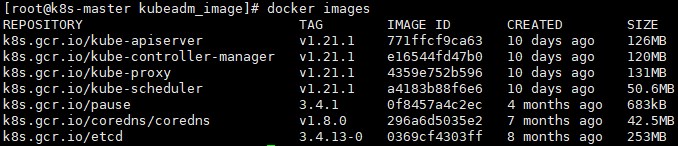

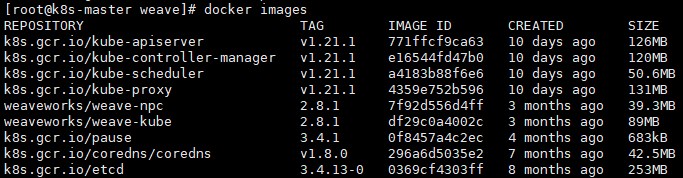

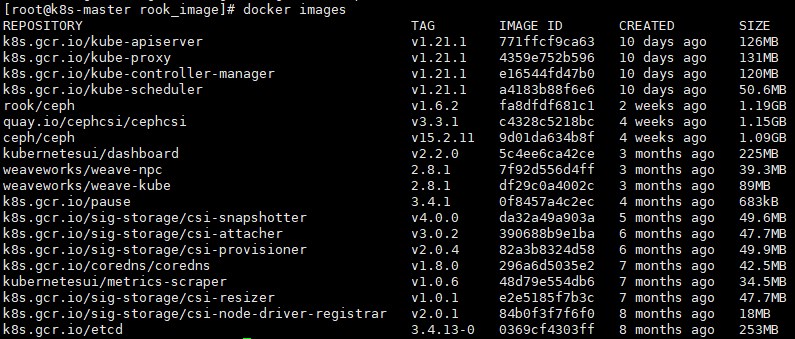

List the images:

# docker images

Initialize the master node:

# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.21.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.100.2.20]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [10.100.2.20 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [10.100.2.20 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 20.505630 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: vt4tz8.zyjl5drriyui1qc4

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.100.2.20:6443 --token vt4tz8.zyjl5drriyui1qc4 \

--discovery-token-ca-cert-hash sha256:6de6d52ef951f0d1cdeee259f6a3e9a375ca32bc4c61d5d49ec628075fbc5b59

As instructed in the output, run:

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

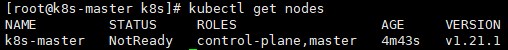

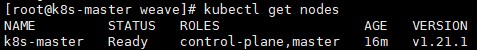

Check the nodes; the status is NotReady:

# kubectl get nodes # nodes can be abbreviated as no

The status is NotReady because no network plugin has been installed yet.

Install the Weave network plugin (master)

Note: Weave is recommended here; Flannel can be installed instead.

Online installation:

# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.apps/weave-net created

# docker images

Offline installation:

Download the manifest:

# curl -fsSLo weave-daemonset.yaml "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

Pull the images:

# cat weave-daemonset.yaml | grep image

image: 'docker.io/weaveworks/weave-kube:2.8.1'

image: 'docker.io/weaveworks/weave-npc:2.8.1'

# docker pull docker.io/weaveworks/weave-kube:2.8.1

# docker pull docker.io/weaveworks/weave-npc:2.8.1
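Instead of copying image names from the grep image output by eye, they can be extracted from any manifest; the same approach works for the Dashboard and Rook YAML files. A sketch assuming GNU grep/sed; manifest_images is a hypothetical helper that only handles the simple "image: value" form used in these manifests:

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the unique image references in a YAML manifest.
# Handles "image: x", "- image: x", quoted values and trailing "# comments";
# commented-out lines and imagePullPolicy are not matched.
manifest_images() {
  grep -E '^[[:space:]]*(-[[:space:]]*)?image:' "$1" \
    | sed -E 's/^[[:space:]]*(-[[:space:]]*)?image:[[:space:]]*//; s/[[:space:]]+#.*$//' \
    | tr -d "\"' " \
    | sort -u
}
```

For example: for img in $(manifest_images weave-daemonset.yaml); do docker pull "$img"; done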

Package the images:

# docker image save weaveworks/weave-kube > weave-kube.tar

# docker image save weaveworks/weave-npc > weave-npc.tar

Upload the archives to the hosts and load them (required on all three nodes).

Load the images:

# docker image load < weave-kube.tar

# docker image load < weave-npc.tar

Install the plugin (master only):

# kubectl apply -f weave-daemonset.yaml

Once the network plugin is installed, k8s-master changes to Ready.

5>. Join the worker nodes

Run the following on each of the two worker nodes:

# kubeadm join 10.100.2.20:6443 --token vt4tz8.zyjl5drriyui1qc4 \

> --discovery-token-ca-cert-hash sha256:6de6d52ef951f0d1cdeee259f6a3e9a375ca32bc4c61d5d49ec628075fbc5b59

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

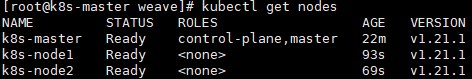
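The --discovery-token-ca-cert-hash value in the join command is the SHA-256 digest of the cluster CA's public key. If only the token is at hand, the hash can be recomputed on the master from /etc/kubernetes/pki/ca.crt with the openssl pipeline given in the kubeadm join documentation. Wrapping it in the function ca_cert_hash is a hypothetical addition of ours, and the pipeline assumes an RSA CA key (the kubeadm default):

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the openssl pipeline from the kubeadm docs:
# extract the CA public key, convert it to DER, and hash it with SHA-256.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after "(stdin)= "
}
```

On the master, echo "sha256:$(ca_cert_hash)" should print the same value as the --discovery-token-ca-cert-hash in the kubeadm init output.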

Checking the nodes again now shows three, all Ready:

# kubectl get nodes

Notes:

1>. 如果提示:[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd".

then edit /etc/docker/daemon.json as described above.

2>. 如果提示:[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

then run systemctl enable kubelet first;

otherwise you will see: [kubelet-check] It seems like the kubelet isn't running or healthy.

3>. 如果提示:[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

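this means the node already tried to join once (an earlier join failed). Reset it with kubeadm reset, enter y to confirm, and then join again.

4>. If you did not record the join command printed by kubeadm init, create a new one with: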

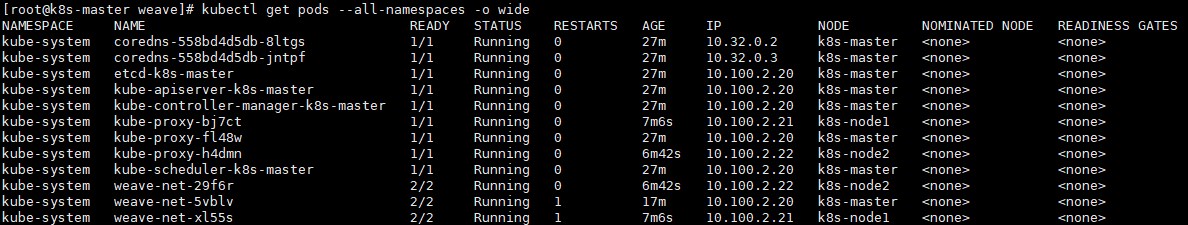

# kubeadm token create --print-join-command

View all Pods:

# kubectl get pods --all-namespaces

Processes now running on the master and on a worker node:

master:

# ps -ef |grep kube

root 9819 9791 1 00:54 ? 00:00:26 etcd --advertise-client-urls=https://10.100.2.20:2379 --cert-file=/etc/kubernetes/pki/etcd/server.crt --client-cert-auth=true --data-dir=/var/lib/etcd --initial-advertise-peer-urls=https://10.100.2.20:2380 --initial-cluster=k8s-master=https://10.100.2.20:2380 --key-file=/etc/kubernetes/pki/etcd/server.key --listen-client-urls=https://127.0.0.1:2379,https://10.100.2.20:2379 --listen-metrics-urls=http://127.0.0.1:2381 --listen-peer-urls=https://10.100.2.20:2380 --name=k8s-master --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt --peer-client-cert-auth=true --peer-key-file=/etc/kubernetes/pki/etcd/peer.key --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt --snapshot-count=10000 --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

root 9927 9835 2 00:54 ? 00:00:36 kube-controller-manager --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf --bind-address=127.0.0.1 --client-ca-file=/etc/kubernetes/pki/ca.crt --cluster-name=kubernetes --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt --cluster-signing-key-file=/etc/kubernetes/pki/ca.key --controllers=*,bootstrapsigner,tokencleaner --kubeconfig=/etc/kubernetes/controller-manager.conf --leader-elect=true --port=0 --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --root-ca-file=/etc/kubernetes/pki/ca.crt --service-account-private-key-file=/etc/kubernetes/pki/sa.key --use-service-account-credentials=true

root 9943 9872 0 00:54 ? 00:00:06 kube-scheduler --authentication-kubeconfig=/etc/kubernetes/scheduler.conf --authorization-kubeconfig=/etc/kubernetes/scheduler.conf --bind-address=127.0.0.1 --kubeconfig=/etc/kubernetes/scheduler.conf --leader-elect=true --port=0

root 9955 9887 6 00:54 ? 00:01:46 kube-apiserver --advertise-address=10.100.2.20 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key --etcd-servers=https://127.0.0.1:2379 --insecure-port=0 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

root 10351 1 3 00:54 ? 00:00:54 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --network-plugin=cni --pod-infra-container-image=k8s.gcr.io/pause:3.4.1

root 10631 10610 0 00:54 ? 00:00:00 /usr/local/bin/kube-proxy --config=/var/lib/kube-proxy/config.conf --hostname-override=k8s-master

root 14997 14752 0 01:06 ? 00:00:00 /home/weave/kube-utils -run-reclaim-daemon -node-name=k8s-master -peer-name=aa:ac:65:a3:27:61 -log-level=debug

root 22230 8332 0 01:23 pts/0 00:00:00 grep --color=auto kube

node:

# ps -ef|grep kube

root 9014 1 1 01:14 ? 00:00:10 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --network-plugin=cni --pod-infra-container-image=k8s.gcr.io/pause:3.4.1

root 9439 9417 0 01:15 ? 00:00:00 /usr/local/bin/kube-proxy --config=/var/lib/kube-proxy/config.conf --hostname-override=k8s-node1

root 10206 9991 0 01:15 ? 00:00:00 /home/weave/kube-utils -run-reclaim-daemon -node-name=k8s-node1 -peer-name=b6:80:2b:0a:95:84 -log-level=debug

root 12838 8325 0 01:23 pts/0 00:00:00 grep --color=auto kube

Allow Pods to run on the master node

By default the master node carries a taint:

# kubectl describe nodes|grep Taints

Taints: node-role.kubernetes.io/master:NoSchedule # on node1 the value is <none>

Remove the taint with:

# kubectl taint nodes --all node-role.kubernetes.io/master-

node/k8s-master untainted

Check again; the Taints field is now <none>:

# kubectl describe nodes|grep Taints

Taints: <none>

To restore master-only scheduling, run:

# kubectl taint node k8s-master node-role.kubernetes.io/master=:NoSchedule

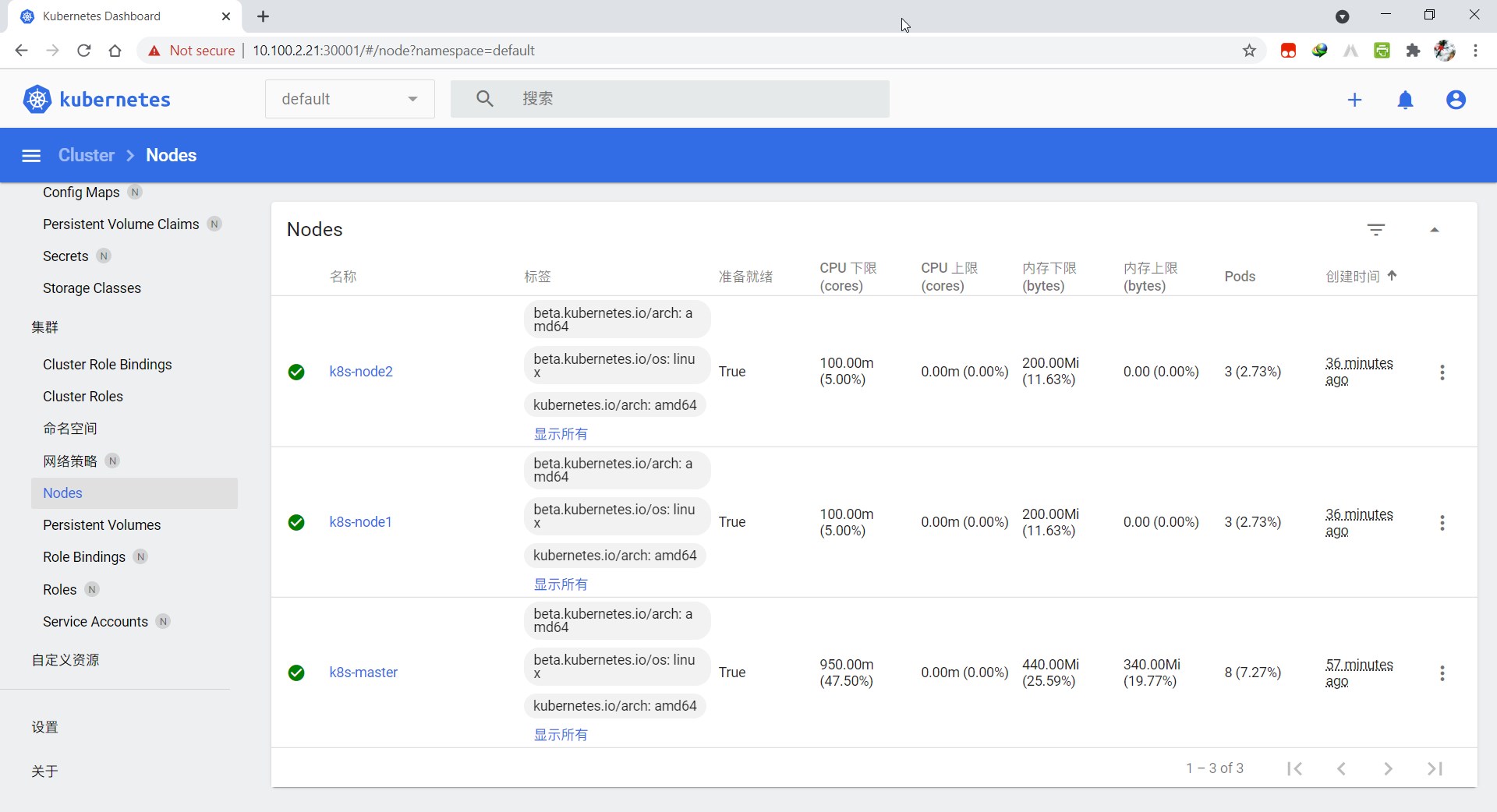

node/k8s-master tainted

Deploy the Dashboard

Official repository: https://github.com/kubernetes/dashboard

One-step online deployment (requires unrestricted Internet access):

# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

Access the Dashboard UI:

# kubectl proxy

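Open the following in a browser (by default this only works on the machine running kubectl proxy, not from outside the cluster):

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

Offline installation:

Again, pulling the images requires unrestricted Internet access: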

# wget -O dashboard-recommended.yaml https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

# cat dashboard-recommended.yaml |grep image

image: kubernetesui/dashboard:v2.2.0

image: kubernetesui/metrics-scraper:v1.0.6

Pull the images:

# docker pull kubernetesui/dashboard:v2.2.0

# docker pull kubernetesui/metrics-scraper:v1.0.6

# docker images|grep kubernetesui

kubernetesui/dashboard v2.2.0 5c4ee6ca42ce 2 months ago 225MB

kubernetesui/metrics-scraper v1.0.6 48d79e554db6 6 months ago 34.5MB

Package the images:

# docker image save kubernetesui/dashboard > dashboard.tar

# docker image save kubernetesui/metrics-scraper > metrics-scraper.tar

Copy the tar archives to the hosts and load them (all nodes).

Load the images:

# docker image load < dashboard.tar

# docker image load < metrics-scraper.tar

By default the Dashboard is only reachable inside the cluster. Change its Service to type NodePort to expose it externally:

# vim dashboard-recommended.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001 # add the node port
  type: NodePort # add type NodePort
  selector:
    k8s-app: kubernetes-dashboard

kind: Deployment
...
spec:
  ...
          imagePullPolicy: IfNotPresent # change Always to IfNotPresent

Install (master):

# kubectl apply -f dashboard-recommended.yaml # can be re-run after editing the manifest

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

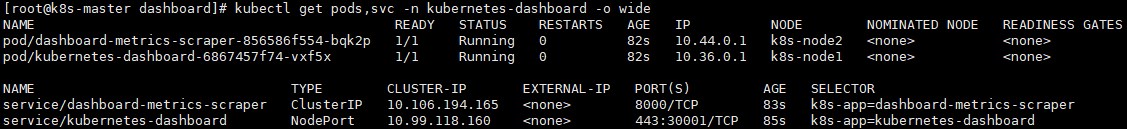

Check the Dashboard's running status, IP addresses and ports:

# kubectl get pods,svc -n kubernetes-dashboard -o wide

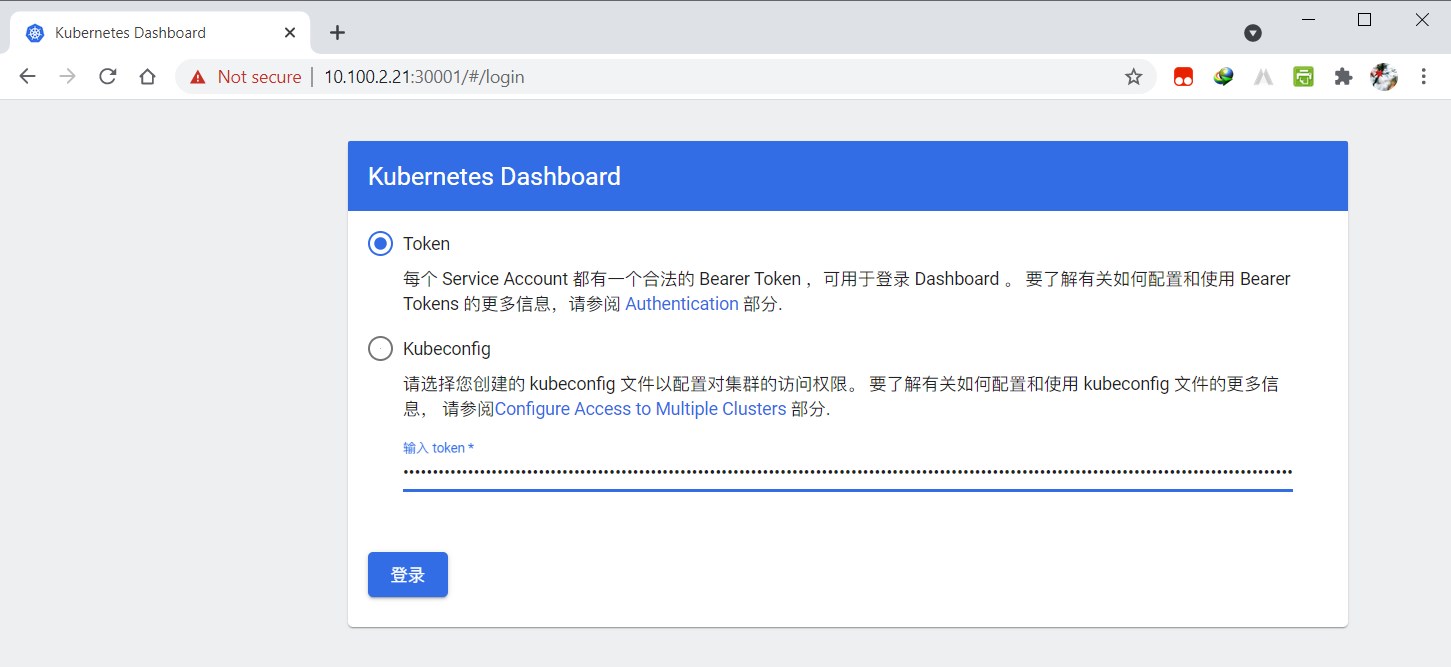

Log in to the Dashboard:

Access URL: https://10.100.2.21:30001 # any node IP works

Create a user:

# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-pd62v

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: c16dfe18-d83b-4432-bef6-e439775437d0

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImRGR2Z2SXRkd2phN0ZpQmJtNThoNE1PLVNTbVJ3OXlsd24wdUpDbEdfYzQifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcGQ2MnYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYzE2ZGZlMTgtZDgzYi00NDMyLWJlZjYtZTQzOTc3NTQzN2QwIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.iB_-WqUnAyHK3jZkzT2uktpFtbErwoV4ED6YTSQscJ4JCoTOq7QGzk23r1wbk8B6Xlpu0VqTOzBpjfGXk_G0LBrLHlQisfh7QHtVtUqA747TeS1aMqKRoPbUo39fD5vHkNzQRpjI8Z4vL2iTu0eEoQ0mISKB2fxcRWjbnwI8XIR3xUxIhmK0IwjYUcE7VZdmfC1mYBZgRgZ7QsrzIZ9XvgW58EM2t-yHc5VAi281OaSHWjaIZLlNi6xIfEODB8r83toFpOkRrO5VVg1vovdBHWoaaPCZulvwWIbj92fBd05h53QRtVLoYXaNXqEDSw87EgkI45JiFOqLzE7faPhFbw

ca.crt: 1066 bytes

The output contains "token: xxxx"; copy the token and use it to log in.
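The token is a JSON Web Token (three base64url segments separated by dots), so decoding its middle segment is a quick way to confirm it belongs to the dashboard-admin service account before pasting it into the login page. A sketch assuming bash and GNU coreutils base64; jwt_payload is a hypothetical helper, and it does not verify the signature:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (claims) of a JWT.
# It only decodes; it does NOT verify the signature.
jwt_payload() {
  local p
  # take the second dot-separated segment and map base64url to base64
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it to a multiple of 4 chars
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
  echo
}
```

Applied to the token above, the "sub" field of the decoded JSON should read system:serviceaccount:kube-system:dashboard-admin.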

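In a browser, open the login URL https://10.100.2.21:30001 (any node IP works), select "Token" on the Dashboard login page, paste the token copied above, and click Sign in.

After logging in, the following warning appears: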

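configmaps is forbidden: User "system:anonymous" cannot list resource "configmaps" in API group "" in the namespace "default"

Solution:

Granting permissions to the anonymous user resolves it. Use this quick fix only in a throwaway test environment: it gives every unauthenticated request full cluster-admin rights.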

# kubectl create clusterrolebinding test:anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/test:anonymous created

Note: to delete the user:

# kubectl delete serviceaccount dashboard-admin -n kube-system

# kubectl delete clusterrolebinding dashboard-admin

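Deploy the Rook container storage plugin

Rook is a Kubernetes storage plugin based on Ceph. Rather than being a thin wrapper around Ceph, Rook adds many enterprise features such as horizontal scaling, migration, disaster recovery and monitoring, which makes it a complete, production-grade container storage plugin.

Thanks to containerization, Rook can deploy a complex Ceph storage backend with just a few commands: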

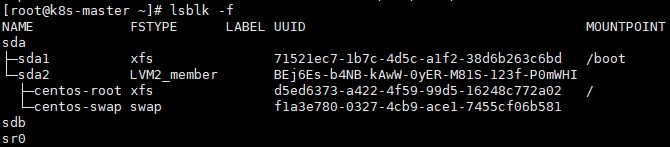

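1>. Every node needs one unused disk to serve as an OSD disk that provides storage space (the cluster needs at least three available nodes to meet Ceph's high-availability requirements; here the master node has already been configured to run Pods).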

# lsblk -f

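Note: configuring a Ceph storage cluster requires at least one of the following local storage options:

<1>. Raw devices (no partitions or formatted filesystems)

<2>. Raw partitions (no formatted filesystem)

<3>. PVs available from a storage class in block mode

2>. Install Rook Ceph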

Clone the Rook GitHub repository locally:

# git clone --single-branch --branch v1.6.2 https://github.com/rook/rook.git

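# cd rook/cluster/examples/kubernetes/ceph/

Pulling images online:

Change the Rook CSI image addresses. The defaults may point to gcr images, which cannot be reached from mainland China, so the gcr images have to be mirrored to an Aliyun registry. The settings can be changed directly as follows: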

# vim operator.yaml # the CSI image settings are around lines 72-77

# ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.3.1"

# ROOK_CSI_REGISTRAR_IMAGE: "k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1"

# ROOK_CSI_RESIZER_IMAGE: "k8s.gcr.io/sig-storage/csi-resizer:v1.0.1"

# ROOK_CSI_PROVISIONER_IMAGE: "k8s.gcr.io/sig-storage/csi-provisioner:v2.0.4"

# ROOK_CSI_SNAPSHOTTER_IMAGE: "k8s.gcr.io/sig-storage/csi-snapshotter:v4.0.0"

# ROOK_CSI_ATTACHER_IMAGE: "k8s.gcr.io/sig-storage/csi-attacher:v3.0.2"

Change them to:

ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.3.1"

ROOK_CSI_REGISTRAR_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-node-driver-registrar:v2.0.1"

ROOK_CSI_RESIZER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-resizer:v1.0.1"

ROOK_CSI_PROVISIONER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-provisioner:v2.0.4"

ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-snapshotter:v4.0.0"

ROOK_CSI_ATTACHER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-attacher:v3.0.2"
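The same edit can be scripted instead of done by hand in vim. A sketch assuming GNU sed (for sed -i) and that the CSI image lines are commented out with "# " exactly as shown above; rewrite_csi_images is a hypothetical helper and the default mirror prefix is the one used above. Review the diff before applying operator.yaml:

```bash
#!/usr/bin/env bash
# Hypothetical helper: uncomment the ROOK_CSI_*_IMAGE settings in
# operator.yaml and point the k8s.gcr.io/sig-storage images at a mirror.
rewrite_csi_images() {
  local file="$1"
  local mirror="${2:-registry.cn-beijing.aliyuncs.com/dotbalo}"
  cp "$file" "$file.bak"   # keep a backup for diffing
  sed -i \
    -e 's|^\([[:space:]]*\)# \(ROOK_CSI_[A-Z_]*_IMAGE:\)|\1\2|' \
    -e "s|k8s\.gcr\.io/sig-storage/|${mirror}/|" \
    "$file"
}
```

For example: rewrite_csi_images operator.yaml && diff operator.yaml.bak operator.yaml. The cephcsi image stays on quay.io, matching the list above.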

Newer Rook versions disable the deployment of the discovery daemon by default; find ROOK_ENABLE_DISCOVERY_DAEMON and change it to true:

ROOK_ENABLE_DISCOVERY_DAEMON: "true"

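Otherwise the rook-discover Pod will not be deployed.

Downloading images offline:

Note: in an intranet environment, pull the images manually, then copy them into the intranet and load them there.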

# cat operator.yaml |grep image

image: rook/ceph:v1.6.2

# cat cluster.yaml |grep image

image: ceph/ceph:v15.2.11

Download the images:

# docker pull quay.io/cephcsi/cephcsi:v3.3.1

# docker pull k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

# docker pull k8s.gcr.io/sig-storage/csi-resizer:v1.0.1

# docker pull k8s.gcr.io/sig-storage/csi-provisioner:v2.0.4

# docker pull k8s.gcr.io/sig-storage/csi-snapshotter:v4.0.0

# docker pull k8s.gcr.io/sig-storage/csi-attacher:v3.0.2

# docker pull rook/ceph:v1.6.2

# docker pull ceph/ceph:v15.2.11 # the cephcsi image is built on top of this image

# docker images

Note: if the official k8s.gcr.io registry is unreachable, pull the images from Aliyun instead:

# docker pull registry.aliyuncs.com/it00021hot/csi-node-driver-registrar:v2.0.1

# docker pull registry.aliyuncs.com/it00021hot/csi-resizer:v1.0.1

# docker pull registry.aliyuncs.com/it00021hot/csi-provisioner:v2.0.4

# docker pull registry.aliyuncs.com/it00021hot/csi-snapshotter:v4.0.0

# docker pull registry.aliyuncs.com/it00021hot/csi-attacher:v3.0.2

Retag the images:

# docker tag registry.aliyuncs.com/it00021hot/csi-node-driver-registrar:v2.0.1 k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

# docker tag registry.aliyuncs.com/it00021hot/csi-resizer:v1.0.1 k8s.gcr.io/sig-storage/csi-resizer:v1.0.1

# docker tag registry.aliyuncs.com/it00021hot/csi-provisioner:v2.0.4 k8s.gcr.io/sig-storage/csi-provisioner:v2.0.4

# docker tag registry.aliyuncs.com/it00021hot/csi-snapshotter:v4.0.0 k8s.gcr.io/sig-storage/csi-snapshotter:v4.0.0

# docker tag registry.aliyuncs.com/it00021hot/csi-attacher:v3.0.2 k8s.gcr.io/sig-storage/csi-attacher:v3.0.2

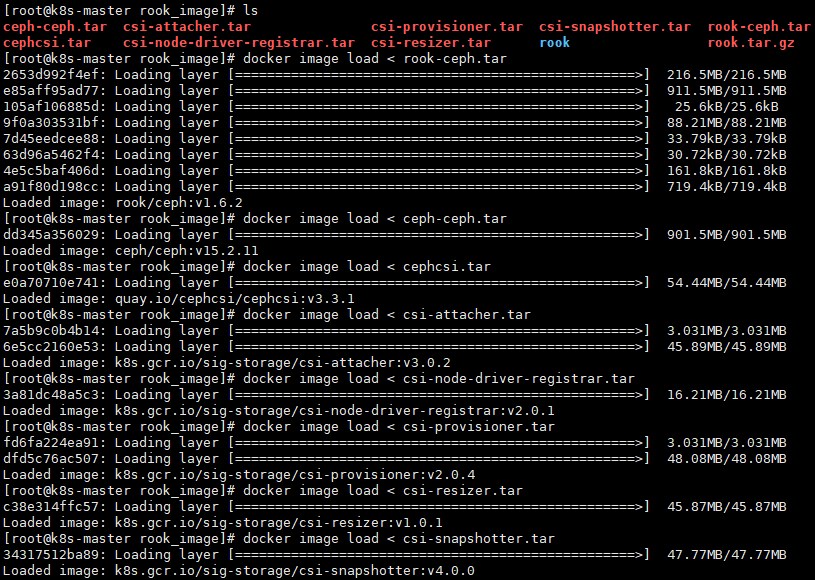

Package the images:

# docker image save quay.io/cephcsi/cephcsi:v3.3.1 > cephcsi.tar

# docker image save k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1 > csi-node-driver-registrar.tar

# docker image save k8s.gcr.io/sig-storage/csi-resizer:v1.0.1 > csi-resizer.tar

# docker image save k8s.gcr.io/sig-storage/csi-provisioner:v2.0.4 > csi-provisioner.tar

# docker image save k8s.gcr.io/sig-storage/csi-snapshotter:v4.0.0 > csi-snapshotter.tar

# docker image save k8s.gcr.io/sig-storage/csi-attacher:v3.0.2 > csi-attacher.tar

# docker image save rook/ceph:v1.6.2 > rook-ceph.tar

# docker image save ceph/ceph:v15.2.11 > ceph-ceph.tar

Copy the tar archives to the hosts and load them (all hosts).
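The copy-and-load commands for this step are not spelled out above. A sketch that reuses the passwordless SSH configured earlier: it only prints the scp/ssh commands so they can be reviewed and then piped into bash. The function name print_distribute_cmds, the NODES default and the /root/images directory are assumptions; on the master itself, load the archives locally with docker image load -i.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the commands that copy every *.tar
# archive in a directory to each worker node and load it into Docker there.
# NODES defaults to the two workers from the table; override as needed.
# File names containing spaces are not handled.
print_distribute_cmds() {
  local dir="${1:-.}" nodes="${NODES:-10.100.2.21 10.100.2.22}" node f
  for node in $nodes; do
    echo "ssh root@$node mkdir -p /root/images"
    for f in "$dir"/*.tar; do
      [ -e "$f" ] || continue   # no archives: the glob stays unexpanded
      echo "scp $f root@$node:/root/images/"
      echo "ssh root@$node docker image load -i /root/images/${f##*/}"
    done
  done
}
```

For example, review print_distribute_cmds /root/rook-images first, then run print_distribute_cmds /root/rook-images | bash.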

Deploy the Rook plugin:

Apply the YAML files to deploy the Rook system components:

# kubectl create -f crds.yaml -f common.yaml

customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclients.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectrealms.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzonegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzones.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephrbdmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created

customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created

customresourcedefinition.apiextensions.k8s.io/volumereplicationclasses.replication.storage.openshift.io created

customresourcedefinition.apiextensions.k8s.io/volumereplications.replication.storage.openshift.io created

customresourcedefinition.apiextensions.k8s.io/volumes.rook.io created

namespace/rook-ceph created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created

serviceaccount/rook-ceph-admission-controller created

clusterrole.rbac.authorization.k8s.io/rook-ceph-admission-controller-role created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-admission-controller-rolebinding created

clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

role.rbac.authorization.k8s.io/rook-ceph-system created

clusterrole.rbac.authorization.k8s.io/rook-ceph-global created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created

serviceaccount/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/00-rook-privileged created

clusterrole.rbac.authorization.k8s.io/psp:rook created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-provisioner-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-provisioner-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

# kubectl create -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

# vim cluster.yaml

Modify the storage section (explicitly specify which nodes and disks to use):

Original configuration:

  storage: # cluster level storage configuration and selection
    useAllNodes: true
    useAllDevices: true

Change it to:

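  storage: # cluster level storage configuration and selection
    useAllNodes: false
    useAllDevices: false
    deviceFilter: "sdb"
    config:
    nodes:
      - name: "k8s-master"
        devices:
          - name: "sdb"
      - name: "k8s-node1"
        devices:
          - name: "sdb"
      - name: "k8s-node2"
        devices:
          - name: "sdb"

Note: each nodes[].name must match the node name shown by kubectl get nodes (the kubernetes.io/hostname label). With the hostnames set earlier, these are k8s-master, k8s-node1 and k8s-node2, not master, node1 and node2.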
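Typing the nodes list by hand gets error-prone as the cluster grows. Below is a minimal sketch of a hypothetical POSIX shell helper, gen_storage_nodes, which is not part of Rook. It prints the storage section above for a given device and list of node names. It assumes storage sits directly under spec with two-space indentation, as in the sample cluster.yaml, and it leaves out deviceFilter and config.

```shell
#!/bin/sh
# Hypothetical helper (not part of Rook): print a cluster.yaml "storage"
# section that uses one raw device on each listed node.
# Indentation assumes storage sits directly under spec.
# Usage: gen_storage_nodes DEVICE NODE...
gen_storage_nodes() {
  device=$1
  shift
  echo "  storage:"
  echo "    useAllNodes: false"
  echo "    useAllDevices: false"
  echo "    nodes:"
  for node in "$@"; do
    # name must equal the node's kubernetes.io/hostname label
    printf '      - name: "%s"\n' "$node"
    echo "        devices:"
    printf '          - name: "%s"\n' "$device"
  done
}
```

Example: # gen_storage_nodes sdb $(kubectl get nodes -o jsonpath='{.items[*].metadata.name}'), then paste the output into cluster.yaml. This relies on node names being equal to hostnames, which is the usual kubeadm default unless --node-name was used.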

# kubectl create -f cluster.yaml

cephcluster.ceph.rook.io/rook-ceph created

Note: if you change parameters in cluster.yaml later, run kubectl apply -f cluster.yaml to reconfigure the Ceph cluster.

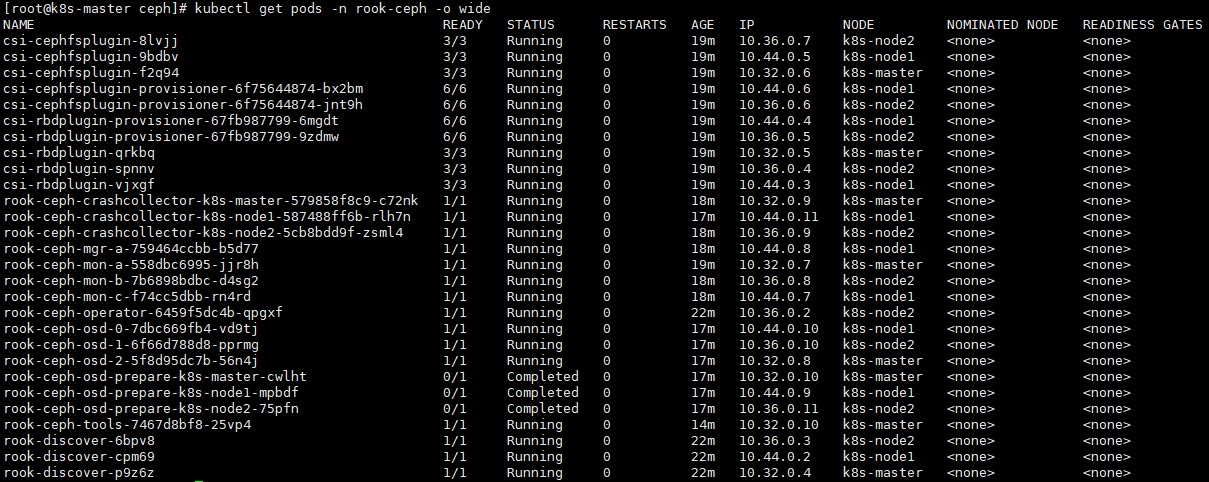

# kubectl get pods -n rook-ceph -o wide

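View the operator logs:

# kubectl logs -n rook-ceph rook-ceph-operator-6459f5dc4b-qpgxf

Notes:

<1>. On each of the three nodes, the rook-ceph-osd-prepare pod should end in the Completed status.

<2>. If one of them stays Running, or a node has no rook-ceph-osd pod, check that node's time synchronization, firewall, memory usage, and so on.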
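You can script the Completed check from note <1> instead of reading the list by eye. Below is a minimal sketch. It assumes the default kubectl get pods column layout (NAME READY STATUS ...), and check_osd_prepare is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods -n rook-ceph` output on stdin
# and check that every rook-ceph-osd-prepare pod is Completed.
# Returns 0 only if at least one such pod exists and all are Completed.
check_osd_prepare() {
  awk '
    $1 ~ /^rook-ceph-osd-prepare-/ {
      total++
      if ($3 == "Completed") ok++          # $3 is the STATUS column
      else print "not completed: " $1 " (" $3 ")"
    }
    END {
      printf "%d/%d osd-prepare pods Completed\n", ok, total
      if (total > 0 && ok == total) exit 0
      exit 1
    }'
}
```

Example: # kubectl get pods -n rook-ceph | check_osd_prepare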

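3>. Deploy the Toolbox

The Rook toolbox is a container that includes common tools for Rook debugging and testing.

# kubectl create -f toolbox.yaml

deployment.apps/rook-ceph-tools created

This deploys a pod named rook-ceph-tools-xxx-xxx.

Test Rook:

Once the toolbox pod is running, use the following command to enter the toolbox: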

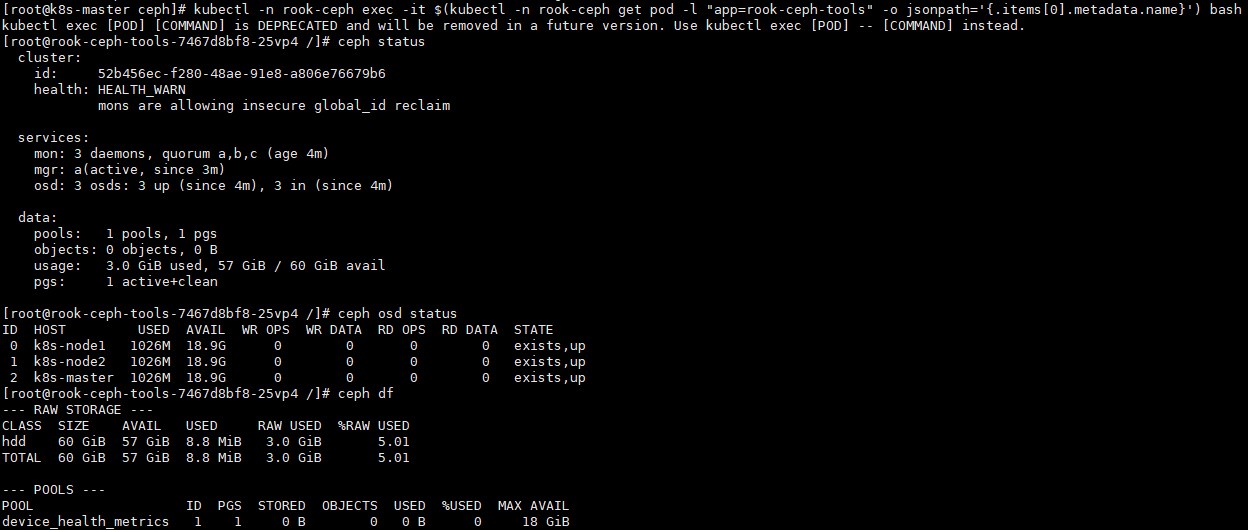

# kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash

If you leave out the -- separator, recent kubectl versions warn: kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Next, check the cluster status. The cluster counts as healthy only when all of the following conditions hold:

<1>. All mons are in quorum.

<2>. A mgr is active.

<3>. At least one OSD is active.

<4>. If the status is not HEALTH_OK, investigate the warnings or error messages.

Check the Ceph cluster status: ceph status

[root@rook-ceph-tools-7467d8bf8-25vp4 /]# ceph status

Check the OSD status: ceph osd status

[root@rook-ceph-tools-7467d8bf8-25vp4 /]# ceph osd status

Check storage usage: ceph df

[root@rook-ceph-tools-7467d8bf8-25vp4 /]# ceph df

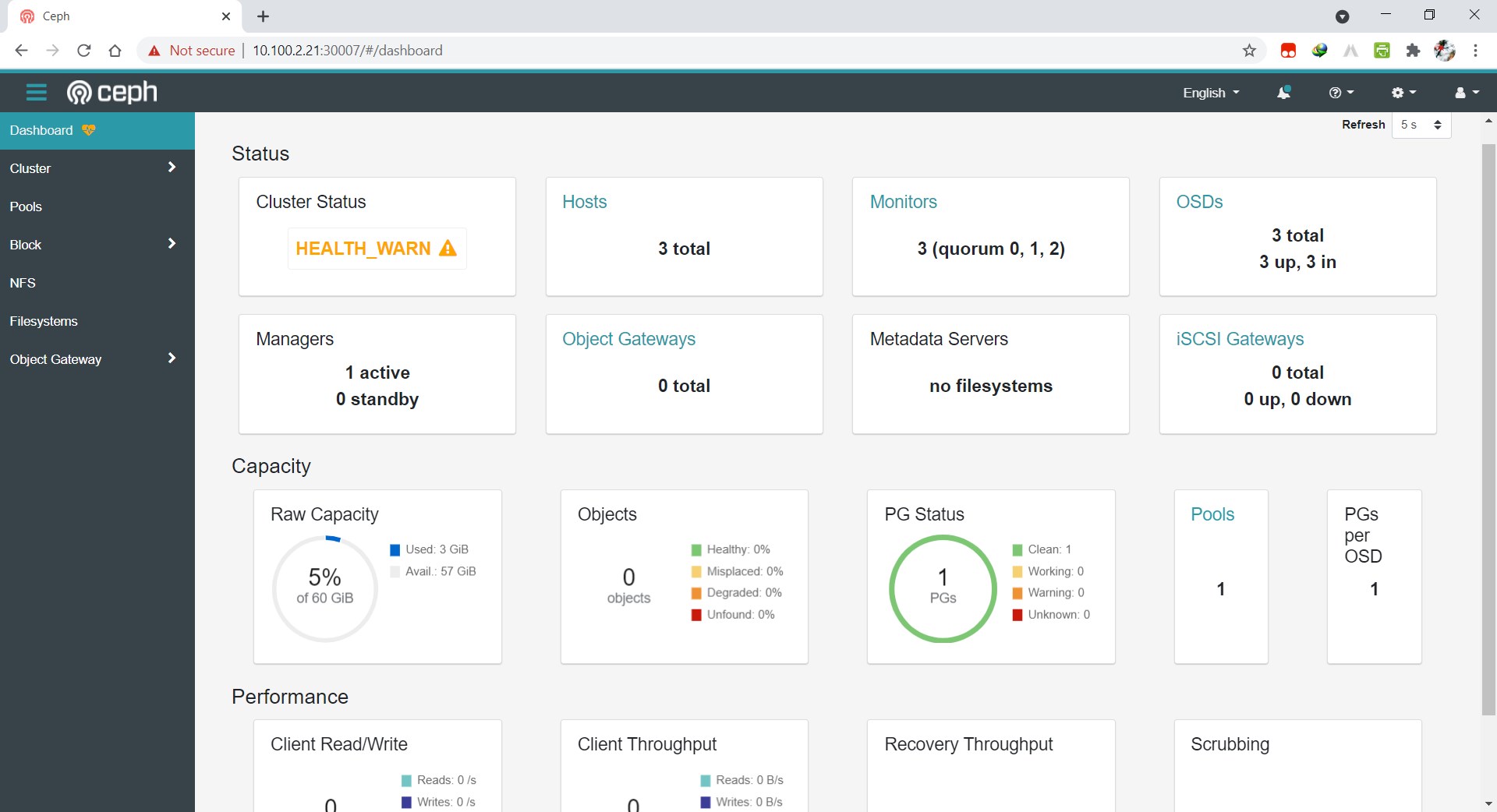
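You can also script the HEALTH_OK check from condition <4>. The sketch below assumes the "health:" line layout that ceph status prints. ceph_health and is_health_ok are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helper: print the health value (HEALTH_OK, HEALTH_WARN or
# HEALTH_ERR) from `ceph status` output read on stdin; fail if none found.
ceph_health() {
  awk '$1 == "health:" { print $2; found = 1; exit }
       END { if (!found) exit 1 }'
}

# Succeed only when the parsed health value is HEALTH_OK.
is_health_ok() {
  [ "$(ceph_health)" = "HEALTH_OK" ]
}
```

Example from outside the toolbox: # kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph status | is_health_ok && echo healthy. Inside the toolbox, the ceph health command prints the same value directly.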

4>. Configure the Rook Dashboard

Pin a fixed access port:

# vim dashboard-external-https.yaml

spec:
  ports:
    - name: dashboard
      port: 8443
      protocol: TCP
      targetPort: 8443
      nodePort: 30007 # fixed port for external access
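A fixed nodePort only works if it falls inside the cluster's NodePort range. That range defaults to 30000-32767 and is set by the kube-apiserver --service-node-port-range flag. The API server rejects a Service whose nodePort is outside the range. The small sketch below checks a value before you edit the YAML. valid_nodeport is a hypothetical helper. The optional second and third arguments are only needed if your cluster changed the range.

```shell
#!/bin/sh
# Hypothetical helper: succeed if PORT is an integer inside [MIN, MAX].
# Defaults mirror the default Kubernetes NodePort range 30000-32767.
# Usage: valid_nodeport PORT [MIN] [MAX]
valid_nodeport() {
  port=$1
  min=${2:-30000}
  max=${3:-32767}
  case $port in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric values
  esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}
```

Example: # valid_nodeport 30007 && echo ok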

Deploy the Dashboard service:

# kubectl create -f dashboard-external-https.yaml

service/rook-ceph-mgr-dashboard-external-https created

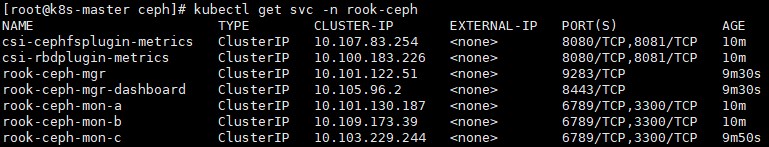

# kubectl get svc -n rook-ceph

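Log in and verify:

Logging in to the dashboard requires authentication. Rook creates a default user, admin, in the namespace where the Rook Ceph cluster runs. It stores the generated password in a Secret named rook-ceph-dashboard-password.

To retrieve the generated password, run:

# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

x:eF:c\YCb9!"D%h|;le

Open https://10.100.2.21:30007 in a browser (the IP of any node works).

Log in with the username admin and the password retrieved above.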
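The | base64 --decode step is needed because Kubernetes stores Secret data base64-encoded, so the jsonpath query returns the encoded string. Below is a minimal sketch of that decoding step as a hypothetical helper, decode_secret_value, which reads the encoded value on stdin. It uses -d, the short form of --decode, which GNU coreutils (as on CentOS) and BusyBox both accept.

```shell
#!/bin/sh
# Hypothetical helper: decode one base64-encoded Secret value from stdin
# and add a trailing newline, like the `base64 --decode && echo` above.
decode_secret_value() {
  base64 -d && echo
}
```

Example: # kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | decode_secret_value. The generated password may contain shell-special characters such as \, " and |, so copy and paste it rather than retyping it, and quote it carefully when you use it in scripts.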

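5>. Deploy Ceph block storage

See https://www.cnblogs.com/deny/p/14229987.html

6>. Deploy a file system

Configure an application that uses Ceph block storage

Deploy an application

Kubernetes differs from Docker and many other projects in one key way: it does not recommend running containers directly from the command line, although it does support this (for example, kubectl run). Instead, you record the container's definition, parameters and configuration in a YAML file, then start it with a single command:

# kubectl create -f <my-config-file>

For example: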

# vim nginx-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

The kind field in this YAML file specifies the type of the API object: a Deployment.

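A Deployment is an object that defines a multi-replica application, that is, multiple replica Pods. When the Pod definition changes, the Deployment also performs a rolling update across the replicas.

In the YAML file above, the number of Pod replicas (spec.replicas) is 2. The Pod template (spec.template) describes the Pods to create. Each Pod contains a single container. That container's image (spec.template.spec.containers[].image) is nginx:1.7.9, and it listens on port 80 (containerPort).

Create the application with kubectl create:

# kubectl create -f nginx-deployment.yaml

deployment.apps/nginx-deployment created # two nginx pods are created

Change replicas to 3 in the file and apply it again:

# kubectl apply -f nginx-deployment.yaml # a third nginx pod is created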

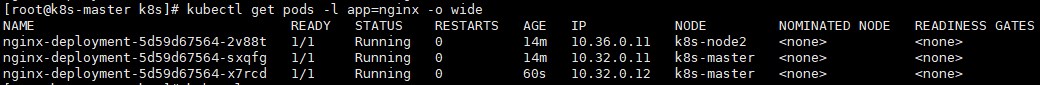
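You can change replicas in an editor. The sketch below makes the same edit non-interactively before kubectl apply. It assumes the manifest has a single replicas: line. set_replicas is a hypothetical helper written in POSIX sh, and it writes through a temporary file to avoid the non-portable sed -i.

```shell
#!/bin/sh
# Hypothetical helper: set the "replicas:" value in a Deployment manifest,
# keeping the line's indentation. Assumes exactly one replicas line.
# Usage: set_replicas FILE COUNT
set_replicas() {
  file=$1
  count=$2
  case $count in
    ''|*[!0-9]*) echo "replicas must be a non-negative integer" >&2; return 1 ;;
  esac
  tmp=$(mktemp) || return 1
  # Rewrite into a temp file, then copy back so the file keeps its permissions.
  sed "s/^\([[:space:]]*replicas:\)[[:space:]]*[0-9][0-9]*/\1 $count/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Example: # set_replicas nginx-deployment.yaml 3 && kubectl apply -f nginx-deployment.yaml. The Deployment was first created with kubectl create, so the first kubectl apply may warn that the kubectl.kubernetes.io/last-applied-configuration annotation is missing. kubectl then patches the annotation automatically. If you do not want to touch the file, kubectl scale deployment nginx-deployment --replicas=3 changes the live object directly, but the YAML file then no longer matches the cluster.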

通过 kubectl get 命令检查这个 YAML 运行起来的状态:

# kubectl get pods -l app=nginx -o wide

注: -l 参数,表示获取所有匹配 app: nginx 标签的 Pod。需要注意的是,在命令行中,所有 key-value 格式的参数,都使用“=”而非“:”表示。

可以使用 kubectl describe 命令,查看一个 API 对象的细节:

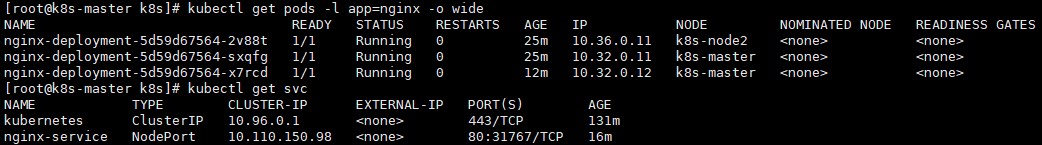

# kubectl describe pod nginx-deployment-5d59d67564-2v88t为该deployment创建nodeport类型的service:

# kubectl expose deployment nginx-deployment --type=NodePort --name=nginx-service

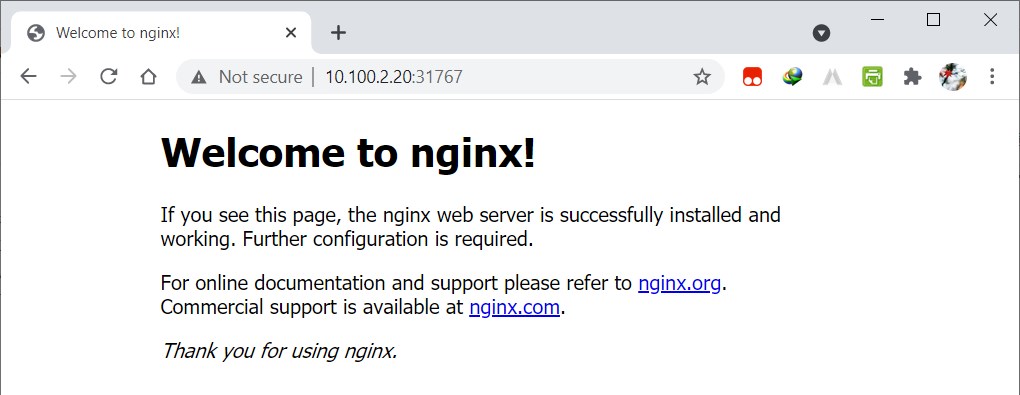

service/nginx-service exposed查看pod运行状态,并在集群外使用nodepod访问nginx 服务:

使用任意节点的ip地址从集群外访问nginx:

http://10.100.2.20:31767

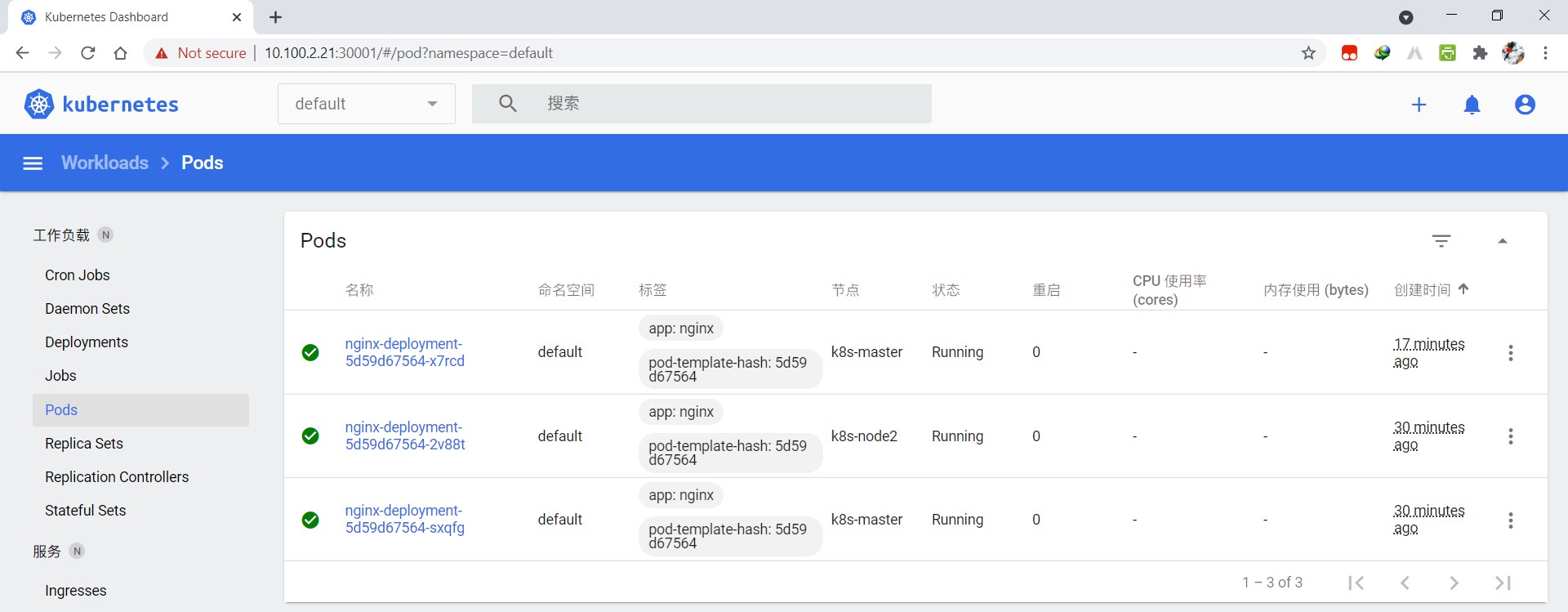
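Kubernetes allocates the NodePort (31767 here) automatically from its range, so the number differs between clusters. The sketch below reads it from the PORT(S) column of kubectl get svc, assuming the default column order (NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE). nodeport_of is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get svc` output on stdin and print the
# NodePort of the named service, taken from PORT(S), e.g. 80:31767/TCP.
# Fails if the service is missing or has no NodePort.
# Usage: nodeport_of SERVICE
nodeport_of() {
  awk -v svc="$1" '
    $1 == svc {
      split($5, ports, ",")              # PORT(S) may list several ports
      n = split(ports[1], parts, "[:/]") # 80:31767/TCP -> 80, 31767, TCP
      if (n == 3) { print parts[2]; found = 1 }
      exit
    }
    END { if (!found) exit 1 }'
}
```

Example: # port=$(kubectl get svc | nodeport_of nginx-service) && curl http://10.100.2.20:$port. In scripts, kubectl get svc nginx-service -o jsonpath='{.spec.ports[0].nodePort}' returns the same number without parsing text and is the more robust choice.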

Log in to the dashboard to view the Pods:

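Last updated 2021-10-08 21:33:50, tagged "k8s kubeadm"; read by 2642 readers.

This site uses the Creative Commons Attribution 4.0 International license: you may freely repost and quote, but you must credit the author and cite the original article.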