Deploying a Highly Available K8S Cluster from Binaries

1、Architecture and environment (five virtual machines)

1>. Deployment architecture

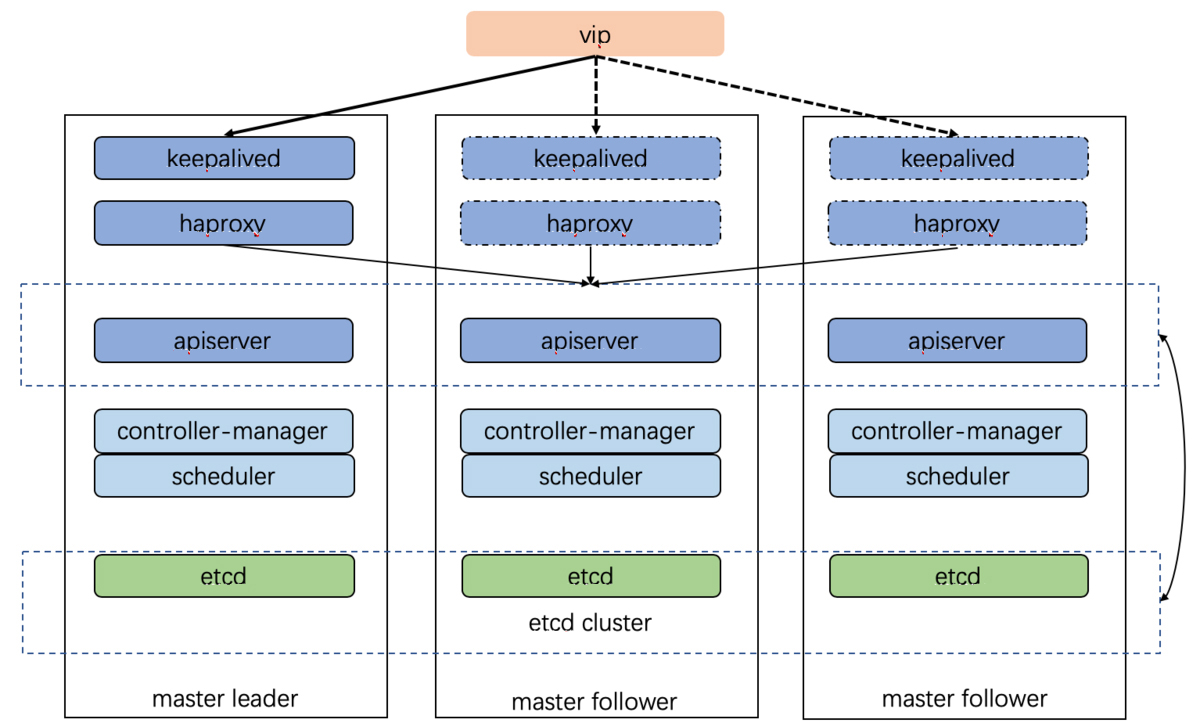

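Making K8S highly available means making each of its core components highly available.

kube-apiserver HA: implemented with HAProxy + keepalived;

kube-controller-manager HA: Kubernetes elects a leader internally (controlled by the --leader-elect option, default true), so only one kube-controller-manager instance is active in the cluster at any time;

kube-scheduler HA: Kubernetes elects a leader internally (controlled by the --leader-elect option, default true), so only one kube-scheduler instance is active in the cluster at any time;

etcd HA: there are two topologies (stacked and external); external etcd is recommended.

Master HA architecture:

1) A VIP is provided in front of the masters (in this guide by keepalived), and traffic sent to it lands on the current keepalived MASTER node.

2) If the keepalived MASTER node fails, the VIP automatically floats to another available node.

3) HAProxy load-balances the traffic across the kube-apiserver nodes.

4) All three kube-apiserver instances serve requests at the same time. Note: only one kube-controller-manager and one kube-scheduler are active; the others stand by. kube-apiserver is the busiest component in K8S, so running several active instances spreads the load, whereas kube-controller-manager and kube-scheduler mainly execute control logic.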

ETCD高可用架构:

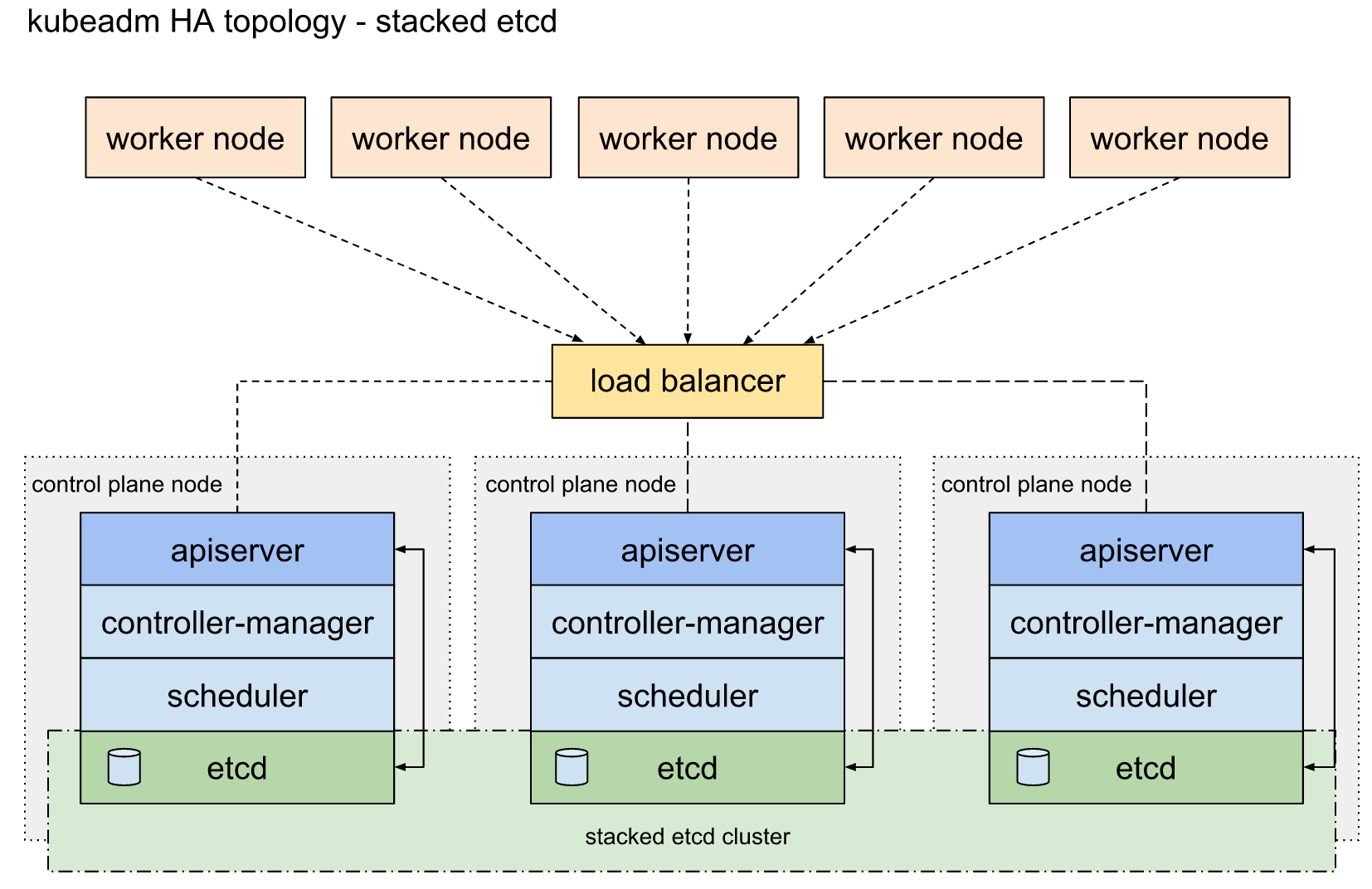

堆叠ETCD:每个master节点上运行一个apiserver和etcd, etcd只与本节点apiserver通信。该方案对基础设施的要求较低,对故障的应对能力也较低。

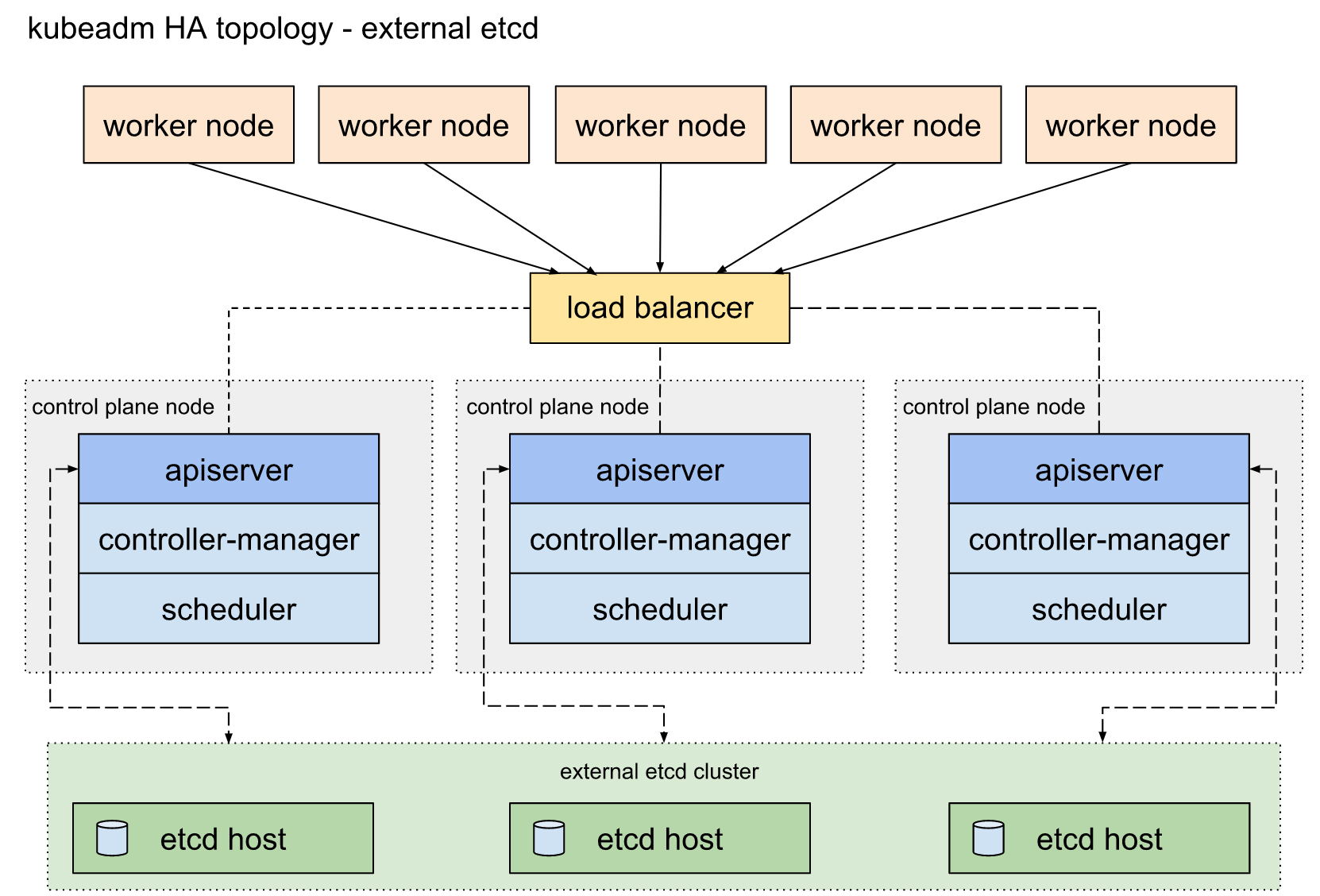

外部ETCD: etcd集群运行在单独的主机上,etcd服务和控制平面被分离,每个etcd都与apiserver节点通信,需要更多的硬件,也有更好的保障能力,当然也可以部署在已部署kubeadm的服务器上,只是在架构上实现了分离,不是所谓的硬件层面分离,建议在硬件资源充足的情况下尽可能选择空闲节点来部署。
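Why three etcd members: etcd (Raft) commits a write only after a majority of members, the quorum, has accepted it. With n members the quorum is floor(n/2)+1, so the cluster keeps working as long as no more than n minus quorum members are down. A minimal bash sketch of that arithmetic; `etcd_quorum` and `etcd_fault_tolerance` are illustrative helper names, not etcd commands:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Majority needed for etcd (Raft) to commit a write: floor(n/2) + 1
etcd_quorum() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

# Members that may fail while a quorum still remains: n - quorum
etcd_fault_tolerance() {
  local n=$1
  echo $(( n - $(etcd_quorum "$n") ))
}

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerates=$(etcd_fault_tolerance "$n")"
done
```

For the three-member cluster used here the quorum is 2 and one member may fail; a fourth member would raise the quorum to 3 without tolerating more failures, which is why odd cluster sizes are the usual choice.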

Deployment environment:

| Hostname | IP | Role | Spec | Installed components |
|---|---|---|---|---|
| k8s-master1 | 10.100.2.31 | master | C2_3G | docker, etcd, kube-apiserver, kube-controller-manager, kube-scheduler, haproxy, keepalived |
| k8s-master2 | 10.100.2.32 | master | C2_3G | docker, etcd, kube-apiserver, kube-controller-manager, kube-scheduler, haproxy, keepalived |
| k8s-master3 | 10.100.2.33 | master | C2_3G | docker, etcd, kube-apiserver, kube-controller-manager, kube-scheduler, haproxy, keepalived |
| k8s-node1 | 10.100.2.34 | node | C2_3G | docker, kubelet, kube-proxy |
| k8s-node2 | 10.100.2.35 | node | C2_3G | docker, kubelet, kube-proxy |
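Many later steps copy the same file from k8s-master1 to several of these hosts with one scp command per host. As a minimal sketch, assuming root SSH access from k8s-master1 (set up in section 2), the host list above can be kept in bash arrays and looped over. The array names and the `distribute` helper are hypothetical, and with `DRY_RUN=1` (the default in this sketch) the commands are only printed:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Host names from the environment table (resolved through /etc/hosts)
MASTERS=(k8s-master1 k8s-master2 k8s-master3)
NODES=(k8s-node1 k8s-node2)
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands, 0 = run them

# distribute SRC DEST_DIR HOST...: copy SRC into DEST_DIR on every HOST
distribute() {
  local src=$1 dest=$2
  shift 2
  local host
  for host in "$@"; do
    if [ "$DRY_RUN" = 1 ]; then
      echo "scp $src $host:$dest"
    else
      ssh "root@$host" "mkdir -p '$dest'"
      scp "$src" "root@$host:$dest"
    fi
  done
}

# Example: the kubeconfig goes to the other two masters and to both nodes
distribute /etc/kubernetes/kubeconfig /etc/kubernetes/ "${MASTERS[@]:1}" "${NODES[@]}"
```

The `mkdir -p` before each copy also covers the steps where the guide creates the target directory by logging in to each host first.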

2>. Versions

CentOS 7.9

etcd: v3.5.0, download: https://github.com/etcd-io/etcd/releases

kubernetes: v1.19.14, download: https://github.com/kubernetes/kubernetes/releases

Select the version to install and click "CHANGELOG" to reach the binary download links:

Server Binaries:https://dl.k8s.io/v1.19.14/kubernetes-server-linux-amd64.tar.gz

Node Binaries:https://dl.k8s.io/v1.19.14/kubernetes-node-linux-amd64.tar.gz

Client Binaries:https://dl.k8s.io/v1.19.14/kubernetes-client-linux-amd64.tar.gz

Docker:CE 20.10.8 https://hub.docker.com/_/docker

haproxy:v2.4.4 https://hub.docker.com/r/haproxytech/haproxy-debian

keepalived:v2.0.20 https://hub.docker.com/r/osixia/keepalived

2、Preparation

1>. Disable the firewall and SELinux

# systemctl disable --now firewalld

# setenforce 0 && sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

2>. Disable swap

# swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

3>. Set the host names and the hosts file

Set the host name on each machine:

# hostnamectl set-hostname k8s-master1

# hostnamectl set-hostname k8s-master2

# hostnamectl set-hostname k8s-master3

# hostnamectl set-hostname k8s-node1

# hostnamectl set-hostname k8s-node2

Append the host entries to /etc/hosts on all hosts:

# cat >> /etc/hosts << EOF

10.100.2.31 k8s-master1

10.100.2.32 k8s-master2

10.100.2.33 k8s-master3 reg.k8stest.com # Docker image registry (Harbor)

10.100.2.34 k8s-node1

10.100.2.35 k8s-node2

EOF

Set up passwordless SSH login from k8s-master1 to the other nodes:

# ssh-keygen -t rsa

# ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8s-master2

# ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8s-master3

# ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8s-node1

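# ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8s-node2

4>. Configure kernel parameters (sysctl)

The net.bridge.bridge-nf-call-* keys only exist once the br_netfilter module is loaded, so load it first and make it persistent:

# modprobe br_netfilter

# echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

# cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.ipv4.conf.all.forwarding = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# sysctl -p /etc/sysctl.d/k8s.conf

5>. Configure time synchronization

# yum install -y chrony

# systemctl enable --now chronyd

# vim /etc/chrony.conf

Set the time source (replace it with your own NTP server as needed):

server 0.centos.pool.ntp.org iburst

# systemctl restart chronyd

# chronyc -a makestep # step the clock immediately

6>. Install Docker

See the separate article "K8S搭建docker本地镜像仓库" (setting up a local Docker image registry for K8S).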

3、Certificates

1>. Create the root CA certificate

# On k8s-master1:

cd /etc/pki/CA/

touch index.txt && echo 01> serial

openssl genrsa -out ca.key 2048

openssl req -new -x509 -nodes -key ca.key -subj "/CN=10.100.2.31" -days 36500 -out ca.crt

Save the generated root CA key and certificate to /etc/kubernetes/pki:

mkdir -p /etc/kubernetes/pki

cp ca.key ca.crt /etc/kubernetes/pki

2>. Create the etcd certificates

1) Create the x509 v3 extension configuration file:

vim etcd_ssl.cnf

[ req ]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[ req_distinguished_name ]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[ alt_names ]

IP.1 = 10.100.2.31

IP.2 = 10.100.2.32

IP.3 = 10.100.2.33

2) Create etcd_server.key and etcd_server.crt:

Create the etcd_server private key:

openssl genrsa -out etcd_server.key 2048

Create the etcd_server certificate signing request:

openssl req -new -key etcd_server.key -config etcd_ssl.cnf -subj "/CN=etcd-server" -out etcd_server.csr

Sign it with the root CA:

openssl x509 -req -in etcd_server.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile etcd_ssl.cnf -out etcd_server.crt

Save the generated key and certificate to /etc/etcd/pki:

mkdir -p /etc/etcd/pki

cp etcd_server.key etcd_server.crt /etc/etcd/pki

3) Create etcd_client.key and etcd_client.crt (used by kube-apiserver to connect to etcd):

Create the etcd_client private key:

openssl genrsa -out etcd_client.key 2048

Create the etcd_client certificate signing request:

openssl req -new -key etcd_client.key -config etcd_ssl.cnf -subj "/CN=etcd-client" -out etcd_client.csr

Sign it with the root CA:

openssl x509 -req -in etcd_client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile etcd_ssl.cnf -out etcd_client.crt

Save the generated key and certificate to /etc/etcd/pki:

cp etcd_client.key etcd_client.crt /etc/etcd/pki

4) Copy ca.crt and the etcd certificates to k8s-master2 and k8s-master3:

ssh k8s-master2

mkdir -p /etc/kubernetes/pki /etc/etcd/pki

exit

ssh k8s-master3

mkdir -p /etc/kubernetes/pki /etc/etcd/pki

exit

scp /etc/kubernetes/pki/ca.crt k8s-master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.crt k8s-master3:/etc/kubernetes/pki/

scp /etc/etcd/pki/etcd_* k8s-master2:/etc/etcd/pki/

scp /etc/etcd/pki/etcd_* k8s-master3:/etc/etcd/pki/

3>. Create the kube-apiserver certificate

1) Create the x509 v3 extension configuration file:

vim master_ssl.cnf

[ req ]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[ req_distinguished_name ]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[ alt_names ]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

DNS.5 = k8s-master1

DNS.6 = k8s-master2

DNS.7 = k8s-master3

IP.1 = 172.16.0.1

IP.2 = 10.100.2.31

IP.3 = 10.100.2.32

IP.4 = 10.100.2.33

IP.5 = 10.100.2.100

2) Create apiserver.key and apiserver.crt:

Create the apiserver private key:

openssl genrsa -out apiserver.key 2048

Create the apiserver certificate signing request:

openssl req -new -key apiserver.key -config master_ssl.cnf -subj "/CN=10.100.2.31" -out apiserver.csr

Sign it with the root CA:

openssl x509 -req -in apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile master_ssl.cnf -out apiserver.crt

Save the generated key and certificate to /etc/kubernetes/pki:

cp apiserver.crt apiserver.key /etc/kubernetes/pki/

3) Copy apiserver.crt and apiserver.key to k8s-master2 and k8s-master3:

scp apiserver.crt apiserver.key k8s-master2:/etc/kubernetes/pki/

scp apiserver.crt apiserver.key k8s-master3:/etc/kubernetes/pki/

4>. Create the certificate that clients use to connect to kube-apiserver:

kube-controller-manager, kube-scheduler, kubelet and kube-proxy connect to kube-apiserver as clients and need a certificate signed by the CA to do so.

A single certificate shared by all of these services is created here:

1) Create the client private key and certificate:

Create the client private key:

openssl genrsa -out client.key 2048

Create the client certificate signing request:

openssl req -new -key client.key -subj "/CN=admin" -out client.csr

# The /CN value in -subj (here "admin") is the user name that kube-apiserver sees for this client.

Sign it with the root CA:

openssl x509 -req -in client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out client.crt -days 36500

Save the generated key and certificate to /etc/kubernetes/pki:

cp client.crt client.key /etc/kubernetes/pki/

2) Copy client.crt and client.key to k8s-master2, k8s-master3 and the Node nodes:

scp client.crt client.key k8s-master2:/etc/kubernetes/pki/

scp client.crt client.key k8s-master3:/etc/kubernetes/pki/

When Node nodes are added later, the certificate files must also be copied to the same directory on each node:

ssh k8s-node1

mkdir -p /etc/kubernetes/pki

exit

ssh k8s-node2

mkdir -p /etc/kubernetes/pki

exit

scp client.crt client.key k8s-node1:/etc/kubernetes/pki/

scp client.crt client.key k8s-node2:/etc/kubernetes/pki/

4、Deploy the HA etcd cluster

1>. Extract the etcd binary package and copy etcd and etcdctl to /usr/bin:

tar zxvf etcd-v3.5.0-linux-amd64.tar.gz

cd etcd-v3.5.0-linux-amd64/

cp etcd etcdctl /usr/bin/

Copy them to k8s-master2 and k8s-master3:

scp etcd etcdctl k8s-master2:/usr/bin/

scp etcd etcdctl k8s-master3:/usr/bin/

2>. Create the systemd unit file:

vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=etcd key-value store

Documentation=https://github.com/etcd-io/etcd

After=network.target

[Service]

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

Restart=always

[Install]

WantedBy=multi-user.target

Copy the systemd unit file to k8s-master2 and k8s-master3:

cd /usr/lib/systemd/system

scp etcd.service k8s-master2:/usr/lib/systemd/system

scp etcd.service k8s-master3:/usr/lib/systemd/system

3>. Create the etcd configuration file:

vim /etc/etcd/etcd.conf

# etcd1 config

ETCD_NAME=etcd1

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://10.100.2.31:2379

ETCD_ADVERTISE_CLIENT_URLS=https://10.100.2.31:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://10.100.2.31:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://10.100.2.31:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://10.100.2.31:2380,etcd2=https://10.100.2.32:2380,etcd3=https://10.100.2.33:2380"

ETCD_INITIAL_CLUSTER_STATE=new

Copy the configuration file to the other two nodes:

scp /etc/etcd/etcd.conf k8s-master2:/etc/etcd/

scp /etc/etcd/etcd.conf k8s-master3:/etc/etcd/

注意修改:

1) 修改ETCD_NAME=etcd1为对应的etcd2和etcd3

2) 修改etcd2和etcd3对应的IP地址4>. 启动etcd集群:

分别在三台master节点上启动etcd服务:

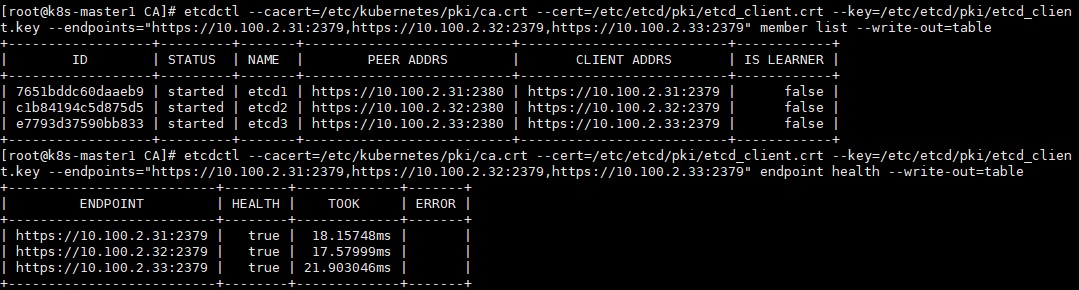

systemctl restart etcd && systemctl enable etcd验证etcd集群状态:

etcdctl --cacert=/etc/kubernetes/pki/ca.crt --cert=/etc/etcd/pki/etcd_client.crt --key=/etc/etcd/pki/etcd_client.key --endpoints="https://10.100.2.31:2379,https://10.100.2.32:2379,https://10.100.2.33:2379" member list --write-out=table

etcdctl --cacert=/etc/kubernetes/pki/ca.crt --cert=/etc/etcd/pki/etcd_client.crt --key=/etc/etcd/pki/etcd_client.key --endpoints="https://10.100.2.31:2379,https://10.100.2.32:2379,https://10.100.2.33:2379" endpoint health --write-out=table
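The two verification commands repeat the same TLS flags and endpoint list. A minimal sketch that builds the endpoint list from the member IPs and wraps etcdctl; `ETCD_IPS`, `etcd_endpoints` and `etcdctl_k8s` are hypothetical names, and with `DRY_RUN=1` the wrapper only prints the command it would run:

```shell
#!/usr/bin/env bash
set -euo pipefail

ETCD_IPS=(10.100.2.31 10.100.2.32 10.100.2.33)
DRY_RUN=${DRY_RUN:-0}

# etcd_endpoints: "https://IP:2379" for every member, comma-separated
etcd_endpoints() {
  local ip out=""
  for ip in "${ETCD_IPS[@]}"; do
    out+="${out:+,}https://$ip:2379"
  done
  echo "$out"
}

# etcdctl_k8s ARGS...: etcdctl with the CA and etcd client certificate from section 3
etcdctl_k8s() {
  local cmd=(etcdctl
    --cacert=/etc/kubernetes/pki/ca.crt
    --cert=/etc/etcd/pki/etcd_client.crt
    --key=/etc/etcd/pki/etcd_client.key
    --endpoints="$(etcd_endpoints)"
    "$@")
  if [ "$DRY_RUN" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Usage on a master node:
#   etcdctl_k8s member list --write-out=table
#   etcdctl_k8s endpoint health --write-out=table
```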

5、Deploy the HA Kubernetes cluster

1>. Extract kubernetes-server-linux-amd64.tar.gz and copy the binaries to /usr/bin:

This includes kube-apiserver, kube-controller-manager, kube-scheduler, kubeadm, kubectl, kubelet, kube-proxy, kube-aggregator, kubectl-convert, apiextensions-apiserver and mounter.

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin/

cp kube-apiserver kube-controller-manager kube-scheduler kubeadm kubectl kubelet kube-proxy kube-aggregator kubectl-convert apiextensions-apiserver mounter /usr/bin/

Copy them to k8s-master2 and k8s-master3:

scp kube-apiserver kube-controller-manager kube-scheduler kubeadm kubectl kubelet kube-proxy kube-aggregator kubectl-convert apiextensions-apiserver mounter k8s-master2:/usr/bin/

scp kube-apiserver kube-controller-manager kube-scheduler kubeadm kubectl kubelet kube-proxy kube-aggregator kubectl-convert apiextensions-apiserver mounter k8s-master3:/usr/bin/

2>. Deploy kube-apiserver

1) Create the systemd unit file:

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/apiserver

ExecStart=/usr/bin/kube-apiserver $KUBE_API_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Copy the systemd unit file to k8s-master2 and k8s-master3:

cd /usr/lib/systemd/system

scp kube-apiserver.service k8s-master2:/usr/lib/systemd/system/

scp kube-apiserver.service k8s-master3:/usr/lib/systemd/system/

2) Create the kube-apiserver configuration file:

vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--insecure-port=0 \

--secure-port=6443 \

--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \

--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \

--client-ca-file=/etc/kubernetes/pki/ca.crt \

--apiserver-count=3 --endpoint-reconciler-type=master-count \

--etcd-servers=https://10.100.2.31:2379,https://10.100.2.32:2379,https://10.100.2.33:2379 \

--etcd-cafile=/etc/kubernetes/pki/ca.crt \

--etcd-certfile=/etc/etcd/pki/etcd_client.crt \

--etcd-keyfile=/etc/etcd/pki/etcd_client.key \

--service-cluster-ip-range=172.16.0.0/16 \

--service-node-port-range=30000-32767 \

--allow-privileged=true \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Copy the configuration file to the other two master nodes:

scp /etc/kubernetes/apiserver k8s-master2:/etc/kubernetes/

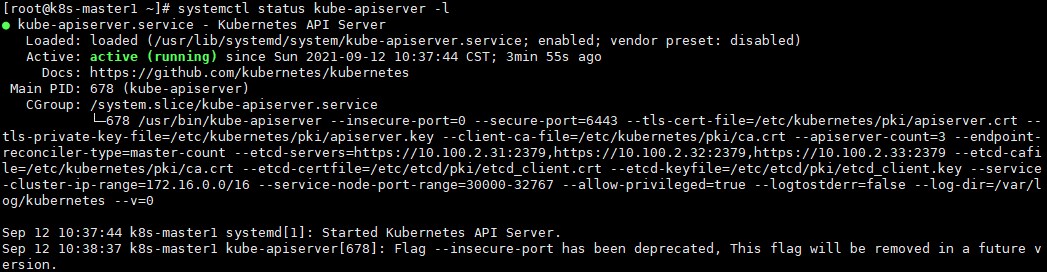

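scp /etc/kubernetes/apiserver k8s-master3:/etc/kubernetes/

3) Start kube-apiserver on all three hosts and enable it at boot:

systemctl enable kube-apiserver --now

Startup error:

kube-apiserver: Error: [service-account-issuer is a required flag, --service-account-signing-key-file and --service-account-issuer are required flags]

Cause: starting with v1.20, kube-apiserver requires the --service-account-issuer and --service-account-signing-key-file flags. The error is unrelated to the deprecation of Docker (dockershim) that Kubernetes also announced for v1.20.

The first tests used K8S v1.22.1, which is why the error appeared; with v1.19 kube-apiserver starts normally.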

https://github.com/kelseyhightower/kubernetes-the-hard-way/issues/626

https://blog.csdn.net/weixin_45727359/article/details/111189256

http://dockone.io/article/450984

https://zhuanlan.zhihu.com/p/334787180
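For reference, if a v1.20 or later kube-apiserver is used instead of v1.19.14, the error above should go away once the service-account flags are supplied. A sketch of the extra arguments, assuming the existing apiserver.key is reused as the token signing key (kube-controller-manager is given the same key below via --service-account-private-key-file) and assuming an issuer URL of https://kubernetes.default.svc.cluster.local; both values are assumptions, not part of the original deployment, and the variable name is hypothetical:

```shell
# Hypothetical additions to KUBE_API_ARGS in /etc/kubernetes/apiserver (v1.20+ only)
KUBE_API_SA_ARGS="--service-account-issuer=https://kubernetes.default.svc.cluster.local \
--service-account-key-file=/etc/kubernetes/pki/apiserver.key \
--service-account-signing-key-file=/etc/kubernetes/pki/apiserver.key"
```

The issuer value is written into the iss claim of the service account tokens the cluster issues, so it should stay stable once workloads use those tokens.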

4) Create the kubeconfig file that clients use to connect to kube-apiserver:

One kubeconfig file is created for kube-controller-manager, kube-scheduler, kubelet and kube-proxy to connect to kube-apiserver; the kubectl command-line tool uses the same file.

vim /etc/kubernetes/kubeconfig

apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://10.100.2.100:9443
    certificate-authority: /etc/kubernetes/pki/ca.crt
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/pki/client.crt
    client-key: /etc/kubernetes/pki/client.key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default

Copy the configuration file to the other two master nodes and to all Node nodes:

scp /etc/kubernetes/kubeconfig k8s-master2:/etc/kubernetes/

scp /etc/kubernetes/kubeconfig k8s-master3:/etc/kubernetes/

scp /etc/kubernetes/kubeconfig k8s-node1:/etc/kubernetes/

scp /etc/kubernetes/kubeconfig k8s-node2:/etc/kubernetes/

3>. Deploy kube-controller-manager

1) Create the systemd unit file:

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/controller-manager

ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Copy the systemd unit file to k8s-master2 and k8s-master3:

cd /usr/lib/systemd/system

scp kube-controller-manager.service k8s-master2:/usr/lib/systemd/system/

scp kube-controller-manager.service k8s-master3:/usr/lib/systemd/system/

2) Create the kube-controller-manager startup configuration file:

vim /etc/kubernetes/controller-manager

KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--leader-elect=true \

--service-cluster-ip-range=172.16.0.0/16 \

--service-account-private-key-file=/etc/kubernetes/pki/apiserver.key \

--root-ca-file=/etc/kubernetes/pki/ca.crt \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Copy the configuration file to the other two master nodes:

scp /etc/kubernetes/controller-manager k8s-master2:/etc/kubernetes/

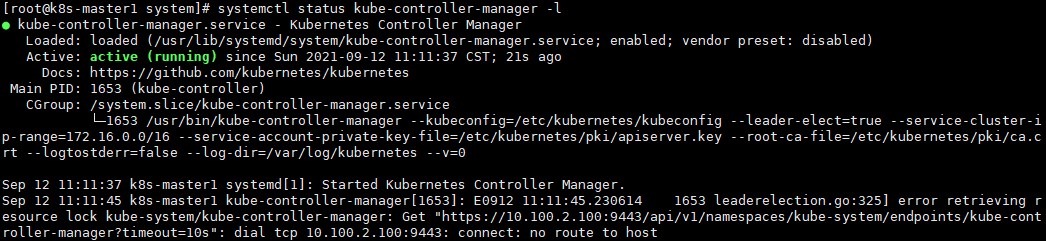

scp /etc/kubernetes/controller-manager k8s-master3:/etc/kubernetes/

3) Start kube-controller-manager on all three master hosts and enable it at boot:

systemctl enable kube-controller-manager --now

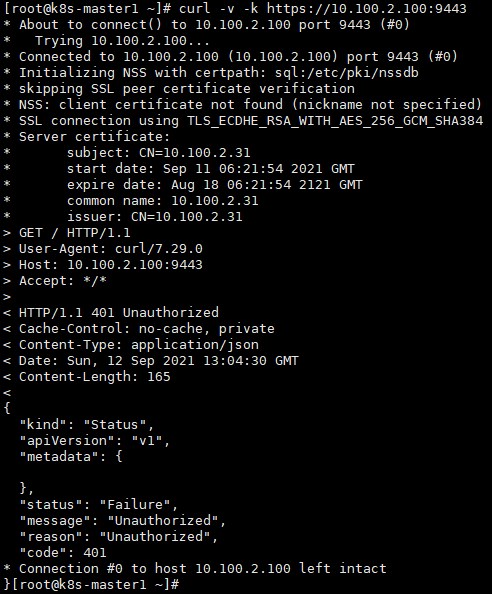

Note: the message "dial tcp 10.100.2.100:9443: connect: no route to host" appears because the VIP has not been configured yet; it is set up with keepalived in section 6.
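A "no route to host" or "connection refused" error can be told apart from a TLS or authentication problem by first checking plain TCP reachability of the VIP. A minimal sketch using bash's built-in /dev/tcp redirection and coreutils timeout; `probe_tcp` is a hypothetical helper:

```shell
#!/usr/bin/env bash
set -euo pipefail

# probe_tcp HOST PORT [SECONDS]: succeed if a TCP connection can be opened in time
probe_tcp() {
  local host=$1 port=$2 secs=${3:-3}
  timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Usage once keepalived and HAProxy are running:
#   probe_tcp 10.100.2.100 9443 && echo "VIP reachable" || echo "VIP not reachable yet"
```

If the probe succeeds but kube-controller-manager still cannot connect, the problem is more likely in the certificates or the kubeconfig than in the network path.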

4>. Deploy kube-scheduler

1) Create the systemd unit file:

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/scheduler

ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS

Restart=always

[Install]

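WantedBy=multi-user.target

Copy the systemd unit file to the other two master nodes:

scp /usr/lib/systemd/system/kube-scheduler.service k8s-master2:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kube-scheduler.service k8s-master3:/usr/lib/systemd/system/

2) Create the kube-scheduler startup configuration file: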

vim /etc/kubernetes/scheduler

KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--leader-elect=true \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Copy the configuration file to the other two master nodes:

scp /etc/kubernetes/scheduler k8s-master2:/etc/kubernetes/

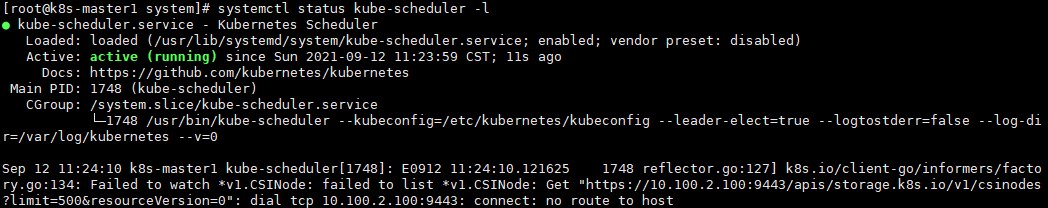

scp /etc/kubernetes/scheduler k8s-master3:/etc/kubernetes/

3) Start kube-scheduler on all three master hosts and enable it at boot:

systemctl enable --now kube-scheduler

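6、Deploy the HAProxy and keepalived HA load balancer

HAProxy forwards client requests to the three kube-apiserver instances behind it, and keepalived keeps the VIP highly available.

1>. Deploy three HAProxy instances

HAProxy provides high availability, load balancing, and TCP- and HTTP-based proxying, and supports tens of thousands of concurrent connections. https://github.com/haproxy/haproxy

HAProxy can be installed on the host or run in a Docker container; a container is used below:

1) Create the HAProxy configuration file haproxy.cfg:

mkdir /etc/haproxy # required on all three master nodes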

vim /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 20000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend kube-apiserver

mode tcp

bind *:9443 # listen on port 9443

option tcplog

default_backend kube-apiserver

backend kube-apiserver

mode tcp

balance roundrobin # round-robin load balancing

server k8s-master1 10.100.2.31:6443 check

server k8s-master2 10.100.2.32:6443 check

server k8s-master3 10.100.2.33:6443 check

listen status

mode http

bind *:8888

stats auth admin:password

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /stats

log 127.0.0.1 local3 err

Copy the configuration file to the other two master nodes:

scp /etc/haproxy/haproxy.cfg k8s-master2:/etc/haproxy/

scp /etc/haproxy/haproxy.cfg k8s-master3:/etc/haproxy/

2) Push the images to the Docker registry

The local registry is built with Harbor. Find the HAProxy image version to use on the official site (Docker Hub):

docker login -u admin http://reg.k8stest.com

docker pull haproxytech/haproxy-debian

docker pull osixia/keepalived

docker image inspect haproxytech/haproxy-debian:latest|grep -i version # "Version": "2.4.4"

The exact keepalived version (2.0.20) is listed on its official page: https://hub.docker.com/r/osixia/keepalived

docker tag haproxytech/haproxy-debian:latest reg.k8stest.com/testproj/haproxy:v2.4.4

docker tag osixia/keepalived:latest reg.k8stest.com/testproj/keepalived:v2.0.20

docker push reg.k8stest.com/testproj/haproxy:v2.4.4

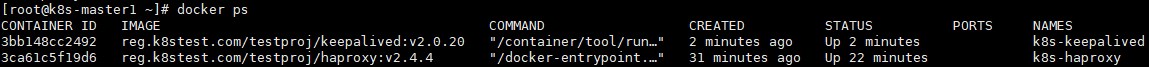

docker push reg.k8stest.com/testproj/keepalived:v2.0.20

3) Start HAProxy on each of the three nodes

Configure the registry address for Docker:

vim /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"insecure-registries": ["reg.k8stest.com"],

"registry-mirrors": ["http://reg.k8stest.com"]

}

Copy it to the other nodes:

scp /etc/docker/daemon.json k8s-master2:/etc/docker/

scp /etc/docker/daemon.json k8s-node2:/etc/docker/

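k8s-master3 hosts the Harbor registry, and k8s-node1 was already configured when it was used as a test client. Restart Docker to apply the configuration:

systemctl restart docker

Pull the HAProxy image:

docker pull reg.k8stest.com/testproj/haproxy:v2.4.4

Start the HAProxy service: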

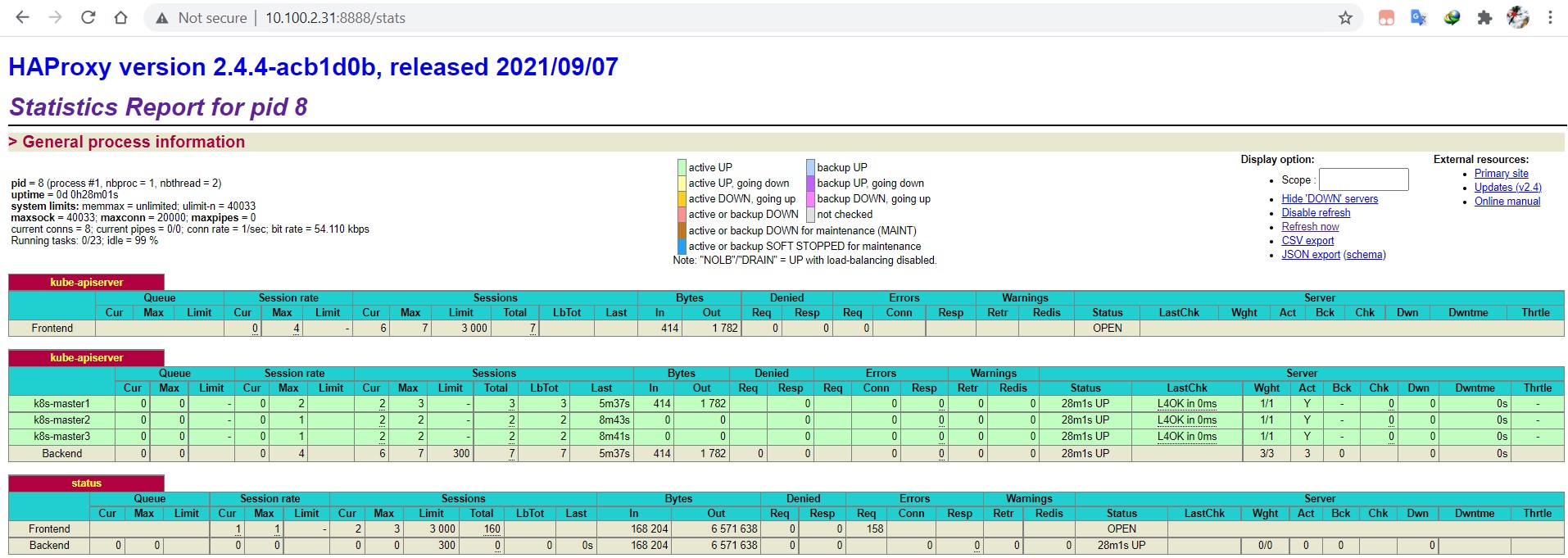

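docker run -d --name=k8s-haproxy --net=host --restart=always -v /etc/haproxy:/usr/local/etc/haproxy:ro reg.k8stest.com/testproj/haproxy:v2.4.4

4) Check that HAProxy is running

Open http://10.100.2.31:8888/stats in a browser to reach the statistics page, log in with the stats auth credentials (admin / password), and check that all three back-end servers show the status UP.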
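The statistics page can also be checked from a script. HAProxy serves the same data as CSV when ;csv is appended to the stats URI; the sketch below assumes the listen status section configured above (port 8888, URI /stats, admin:password) and locates the status column by its header name rather than by position. `backend_status` is a hypothetical helper:

```shell
#!/usr/bin/env bash
set -euo pipefail

# backend_status BACKEND: read HAProxy stats CSV on stdin and print
# "server status" for every server row of that backend
backend_status() {
  awk -F, -v be="$1" '
    NR == 1 {                          # header line: "# pxname,svname,...,status,..."
      sub(/^# /, "")
      for (i = 1; i <= NF; i++) col[$i] = i
      next
    }
    $col["pxname"] == be && $col["svname"] != "FRONTEND" && $col["svname"] != "BACKEND" {
      print $col["svname"], $col["status"]
    }'
}

# Usage against one HAProxy instance:
#   curl -s -u admin:password 'http://10.100.2.31:8888/stats;csv' | backend_status kube-apiserver
```

The FRONTEND and BACKEND rows are skipped because this configuration uses the name kube-apiserver for both the frontend and the backend.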

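2>. Deploy three keepalived instances

keepalived is based on VRRP (Virtual Router Redundancy Protocol), with one MASTER and several BACKUP nodes.

The MASTER holds the VIP and serves traffic. It keeps sending multicast VRRP advertisements; when the BACKUP nodes stop receiving them, they assume the MASTER is down, and the remaining node with the highest priority becomes the new MASTER and takes over the VIP.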
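This election interacts with the track script configured below (weight 5, base priority 100 on master1): because the weight is positive, keepalived adds 5 to a node's priority while check_haproxy succeeds and leaves the base priority when it fails, and the node with the highest effective priority holds the VIP. A small sketch of that arithmetic shows why the BACKUP priorities should be below 100 by less than the weight. The backup priorities used (99, 98, 90) are example values, `effective_priority` and `vip_owner` are hypothetical helpers, and keepalived's tie-breaking by IP address is ignored:

```shell
#!/usr/bin/env bash
set -euo pipefail

WEIGHT=5   # "weight 5" in vrrp_script check_haproxy

# effective_priority BASE CHECK_OK(1|0): base + weight while the check passes
effective_priority() {
  local base=$1 ok=$2
  if [ "$ok" = 1 ]; then echo $(( base + WEIGHT )); else echo "$base"; fi
}

# vip_owner NAME:BASE:CHECK_OK ...: print the node with the highest effective priority
vip_owner() {
  local entry name base ok prio best="" best_prio=-1
  for entry in "$@"; do
    IFS=: read -r name base ok <<< "$entry"
    prio=$(effective_priority "$base" "$ok")
    if [ "$prio" -gt "$best_prio" ]; then best=$name; best_prio=$prio; fi
  done
  echo "$best"
}

# HAProxy down on master1: 100 < 99+5, so the VIP moves (prints k8s-master2)
vip_owner k8s-master1:100:0 k8s-master2:99:1 k8s-master3:98:1
# Backup priorities too low: 90+5 < 100, so the VIP stays on master1 (prints k8s-master1)
vip_owner k8s-master1:100:0 k8s-master2:90:1 k8s-master3:90:1
```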

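keepalived can be installed on the host or run in a Docker container; a container is used below:

1) Create the keepalived configuration file keepalived.conf:

mkdir -p /etc/keepalived # required on all three master nodes

vim /etc/keepalived/keepalived.conf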

! Configuration File for keepalived

global_defs {

router_id LVS_K8S

}

vrrp_script check_haproxy {

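#script "/etc/keepalived/check_haproxy.sh" # alternative: check with a script

script "/bin/bash -c 'if [[ $(netstat -nlp | grep 9443) ]]; then exit 0; else exit 1; fi'" # check that HAProxy is listening on 9443

interval 2 # run the check every 2 seconds

weight 5 # add 5 to the priority while the check succeeds

}

vrrp_instance VI_1 {

state MASTER # set to BACKUP on the backup nodes

interface ens33 # network interface name

virtual_router_id 51 # must be identical on all nodes of the same virtual router group

priority 100 # base priority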

advert_int 1

authentication {

auth_type PASS

auth_pass password

}

track_script {

check_haproxy

}

virtual_ipaddress {

10.100.2.100

}

}

Copy the configuration file to the other two master nodes:

scp /etc/keepalived/keepalived.conf k8s-master2:/etc/keepalived/

scp /etc/keepalived/keepalived.conf k8s-master3:/etc/keepalived/

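Note: on those nodes, change state MASTER to BACKUP and set priority slightly lower than master1's 100, keeping the difference smaller than the check weight (5) so that the VIP moves when only HAProxy fails.

2) Start keepalived on each of the three nodes:

Pull the keepalived image:

docker login -u admin -p Harbor12345 http://reg.k8stest.com

docker pull reg.k8stest.com/testproj/keepalived:v2.0.20

Start the keepalived service: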

docker run -d --name=k8s-keepalived --restart=always --net=host \

--cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW \

-v /etc/keepalived/keepalived.conf:/container/service/keepalived/assets/keepalived.conf \

reg.k8stest.com/testproj/keepalived:v2.0.20 --copy-service

3) Verify that kube-apiserver can be reached through HAProxy on the VIP:

curl -v -k https://10.100.2.100:9443

7、Deploy the Node services

Note: in this test, all master nodes are also configured as nodes.

1>. Extract kubernetes-node-linux-amd64.tar.gz

Copy the binaries kubeadm, kubectl, kubelet and kube-proxy to /usr/bin.

tar zxvf kubernetes-node-linux-amd64.tar.gz

cd kubernetes/node/bin/

cp kubeadm kubectl kubelet kube-proxy /usr/bin/

Note: the server package on the three master nodes already contains these four files, so this step can be skipped there.

Copy them to k8s-node1 and k8s-node2:

scp kubeadm kubectl kubelet kube-proxy k8s-node1:/usr/bin/

scp kubeadm kubectl kubelet kube-proxy k8s-node2:/usr/bin/

2>. Deploy kubelet

1) Create the systemd unit file:

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet $KUBELET_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Copy the systemd unit file to the other nodes:

scp /usr/lib/systemd/system/kubelet.service k8s-master2:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kubelet.service k8s-master3:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kubelet.service k8s-node1:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kubelet.service k8s-node2:/usr/lib/systemd/system/

2) Create the kubelet startup configuration file:

vim /etc/kubernetes/kubelet

KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--config=/etc/kubernetes/kubelet.config \

--hostname-override=10.100.2.31 \

--network-plugin=cni \

--pod-infra-container-image=reg.k8stest.com/testproj/pause:v3.2 \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Copy the configuration file to the other nodes:

scp /etc/kubernetes/kubelet k8s-master2:/etc/kubernetes/

scp /etc/kubernetes/kubelet k8s-master3:/etc/kubernetes/

scp /etc/kubernetes/kubelet k8s-node1:/etc/kubernetes/

scp /etc/kubernetes/kubelet k8s-node2:/etc/kubernetes/

Note: on each node, change --hostname-override=10.100.2.31 to that node's own IP address or host name.

3) Create the kubelet configuration file:

vim /etc/kubernetes/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0

port: 10250

cgroupDriver: systemd # the default is cgroupfs; must match Docker's native.cgroupdriver=systemd

clusterDNS: ["172.16.0.100"]

clusterDomain: cluster.local

authentication:
  anonymous:
    enabled: true
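Two addresses in this setup are tied to --service-cluster-ip-range=172.16.0.0/16 from the kube-apiserver configuration: clusterDNS (172.16.0.100) must be a ClusterIP inside that range so that the DNS Service can later be created with it, and the first address of the range, 172.16.0.1, is assigned to the built-in kubernetes Service, which is why it appears as IP.1 in master_ssl.cnf. A minimal bash sketch of the range check; `ip_to_int` and `ip_in_cidr` are hypothetical helpers:

```shell
#!/usr/bin/env bash
set -euo pipefail

# ip_to_int A.B.C.D: dotted IPv4 address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP NET/PREFIX: succeed if IP lies inside the CIDR block
ip_in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

SERVICE_CIDR=172.16.0.0/16
for ip in 172.16.0.1 172.16.0.100; do
  if ip_in_cidr "$ip" "$SERVICE_CIDR"; then
    echo "$ip is inside $SERVICE_CIDR"
  else
    echo "$ip is OUTSIDE $SERVICE_CIDR"
  fi
done
```

The same check works in the other direction: the Pod addresses that show up later in the ipvsadm output (192.168.x.x, from Calico's default IP pool) should fall outside the service range.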

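Note: since Kubernetes 1.10 the kubelet configuration file passed with --config (KubeletConfiguration) is beta, and most kubelet command-line flags are now recommended to be set in that file instead.

Copy the configuration file to the other nodes: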

scp /etc/kubernetes/kubelet.config k8s-master2:/etc/kubernetes/

scp /etc/kubernetes/kubelet.config k8s-master3:/etc/kubernetes/

scp /etc/kubernetes/kubelet.config k8s-node1:/etc/kubernetes/

scp /etc/kubernetes/kubelet.config k8s-node2:/etc/kubernetes/

4) Start kubelet on the nodes and enable it at boot:

systemctl enable --now kubelet

3>. Deploy kube-proxy

1) Create the systemd unit file:

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

Copy the systemd unit file to the other nodes:

scp /usr/lib/systemd/system/kube-proxy.service k8s-master2:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kube-proxy.service k8s-master3:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kube-proxy.service k8s-node1:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kube-proxy.service k8s-node2:/usr/lib/systemd/system/

2) Create the kube-proxy startup configuration file:

vim /etc/kubernetes/proxy

KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--hostname-override=10.100.2.31 \

--proxy-mode=iptables \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Copy the configuration file to the other nodes:

scp /etc/kubernetes/proxy k8s-master2:/etc/kubernetes/

scp /etc/kubernetes/proxy k8s-master3:/etc/kubernetes/

scp /etc/kubernetes/proxy k8s-node1:/etc/kubernetes/

scp /etc/kubernetes/proxy k8s-node2:/etc/kubernetes/

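Note: on each node, change --hostname-override=10.100.2.31 to that node's own IP address or host name.

3) Start kube-proxy on the nodes and enable it at boot:

systemctl enable --now kube-proxy

4) Switch kube-proxy from iptables to IPVS (optional)

kube-proxy uses iptables by default. Kubernetes 1.8 introduced an IPVS mode for kube-proxy. Like iptables, IPVS is built on Netfilter, but it looks rules up in hash tables, so once the number of Services reaches a certain scale the lookup speed becomes an advantage and Service performance improves.

Steps:

Enable IPVS support:

yum install ipvsadm ipset -y

# Load the modules now (lost after a reboot)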

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

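# Load the modules at every boot

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

Modify the kube-proxy configuration: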

# vim /etc/kubernetes/proxy

KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--hostname-override=10.100.2.34 \

--proxy-mode=ipvs \

--masquerade-all=true \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Change --proxy-mode to ipvs and add --masquerade-all=true, then restart kube-proxy:

# systemctl restart kube-proxy

View the IPVS rules:

# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.0.1:443 rr

-> 10.100.2.31:6443 Masq 1 2 0

-> 10.100.2.32:6443 Masq 1 1 0

-> 10.100.2.33:6443 Masq 1 0 0

TCP 172.16.0.100:53 rr

-> 192.168.72.203:53 Masq 1 0 0

-> 192.168.139.71:53 Masq 1 0 0

-> 192.168.254.19:53 Masq 1 0 0

TCP 172.16.0.100:9153 rr

-> 192.168.72.203:9153 Masq 1 0 0

-> 192.168.139.71:9153 Masq 1 0 0

-> 192.168.254.19:9153 Masq 1 0 0

UDP 172.16.0.100:53 rr

-> 192.168.72.203:53 Masq 1 0 0

-> 192.168.139.71:53 Masq 1 0 0

-> 192.168.254.19:53 Masq 1 0 0

Problem 1: kube-proxy reports that the kernel version is too old

# systemctl status kube-proxy -l

Oct 05 20:15:43 k8s-master1 systemd[1]: Started Kubernetes Kube-Proxy Server.

Oct 05 20:15:55 k8s-master1 kube-proxy[689]: E1005 20:15:55.677715 689 proxier.go:381] can’t set sysctl net/ipv4/vs/conn_reuse_mode, kernel version must be at least 4.1

The kernel is too old; it must be upgraded to 4.1 or later.
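Whether a node needs the upgrade can be checked before touching GRUB. A minimal sketch that compares a kernel release string with the 4.1 minimum from the log message using GNU sort -V (version sort); `version_ge` is a hypothetical helper:

```shell
#!/usr/bin/env bash
set -euo pipefail

# version_ge A B: succeed if version A >= version B (GNU sort -V ordering)
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Usage on a node:
#   version_ge "$(uname -r)" 4.1 && echo "kernel OK" || echo "kernel older than 4.1"
```

With this helper the stock CentOS 7.9 kernel (3.10.0-1160.el7.x86_64) fails the check and the upgraded 4.9.230-37.el7.x86_64 kernel passes it.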

Upgrade the kernel (the test system is CentOS 7.9):

Download site: https://developer.aliyun.com/packageSearch?word=kernel

https://mirrors.aliyun.com/centos/7.9.2009/virt/x86_64/xen-410/Packages/k/kernel-4.9.230-37.el7.x86_64.rpm?spm=a2c6h.13651111.0.0.3f732f70YqUgRp&file=kernel-4.9.230-37.el7.x86_64.rpm

Upload kernel-4.9.230-37.el7.x86_64.rpm to every node.

Install the new kernel:

# yum install kernel-4.9.230-37.el7.x86_64.rpm -y

List the available boot entries:

# cat /boot/grub2/grub.cfg |grep menuentry

menuentry 'CentOS Linux (4.9.230-37.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted ...

menuentry 'CentOS Linux (3.10.0-1160.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted ...

Set the default boot kernel:

# grub2-set-default 'CentOS Linux (4.9.230-37.el7.x86_64) 7 (Core)'

Reboot:

# reboot

Check the kernel version:

# uname -r

4.9.230-37.el7.x86_64

Problem 2: kube-proxy reports a conntrack error

# systemctl status kube-proxy -l

Oct 05 20:50:39 k8s-node1 kube-proxy[4072]: E1005 20:50:39.865332 4072 proxier.go:1647] Failed to delete stale service IP 172.16.0.100 connections, error: error deleting connection tracking state for UDP service IP: 172.16.0.100, error: error looking for path of conntrack: exec: "conntrack": executable file not found in $PATH

Solution:

yum -y install conntrack

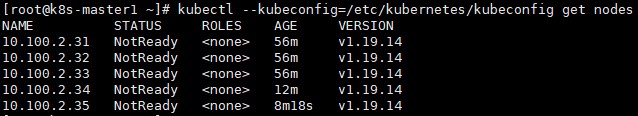

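Then restart kube-proxy.

4>. Verify the node information with kubectl on a master

Once kubelet and kube-proxy are running on a node, the node registers itself with the master automatically, and the registered nodes can then be queried with kubectl on a master host.

Because the master uses HTTPS with client certificate authentication, kubectl must connect with the client certificate signed by the CA. Use the kubeconfig file created earlier: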

kubectl --kubeconfig=/etc/kubernetes/kubeconfig get nodes

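The STATUS is NotReady because no CNI network plugin has been deployed yet.

8、Deploy the CNI network plugin

Deploying the network plugin works the same way as on a cluster created with kubeadm. For example, to deploy the Calico CNI plugin:

1>. Online deployment:

# kubectl apply -f "https://docs.projectcalico.org/manifests/calico.yaml"

2>. Offline deployment:

1) Allow K8S to pull from the private Harbor registry:

By default K8S can pull only public images from Harbor; pulling a private image fails with ErrImagePull or ImagePullBackOff.

There are generally two ways to solve this:

<1>. Make the image's project public in Harbor (usually not adopted)

<2>. Create a registry credential secret and reference it when pulling images

Create the secret:

<1>. First log in to the Harbor registry on the server:

Log in to Harbor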

# docker login -u admin -p Harbor12345 http://reg.k8stest.com

...

Login Succeeded

<2>. View the stored login credentials:

After a successful login, a .docker/config.json file is created in the current user's home directory

# cat ~/.docker/config.json

{

"auths": {

"reg.k8stest.com": {

"auth": "YWRtaW46SGFyYm9yMTIzNDU="

}

}

}

Base64-encode config.json:

# cat ~/.docker/config.json |base64 -w 0

ewoJImF1dGhzIjogewoJCSJyZWcuazhzdGVzdC5jb20iOiB7CgkJCSJhdXRoIjogIllXUnRhVzQ2U0dGeVltOXlNVEl6TkRVPSIKCQl9Cgl9Cn0=

<3>. Create the Secret object:

Write the secret-harbor.yaml file:

# vim secret-harbor.yaml

apiVersion: v1
kind: Secret
metadata:
  name: harborlogin
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: ewoJImFxxx...

The .dockerconfigjson value is the base64 output from step <2>.
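What the Secret contains can be reproduced without docker login: the auth field is base64("user:password"), and .dockerconfigjson is the whole config.json encoded once more with base64. A minimal sketch with GNU coreutils base64; `docker_auth` and `dockerconfigjson` are hypothetical helper names, and the credentials are the example Harbor values used throughout this guide:

```shell
#!/usr/bin/env bash
set -euo pipefail

# docker_auth USER PASS: the "auth" field that docker login stores in config.json
docker_auth() {
  printf '%s:%s' "$1" "$2" | base64 -w 0
}

# dockerconfigjson REGISTRY USER PASS: value for data.".dockerconfigjson" in the Secret
dockerconfigjson() {
  printf '{"auths":{"%s":{"auth":"%s"}}}' "$1" "$(docker_auth "$2" "$3")" | base64 -w 0
}

docker_auth admin Harbor12345; echo
dockerconfigjson reg.k8stest.com admin Harbor12345; echo
```

The auth value matches the one in ~/.docker/config.json above. The full .dockerconfigjson string differs from the one produced from config.json only because this sketch encodes compact JSON instead of the pretty-printed file; both decode to equivalent JSON. Base64 is an encoding, not encryption, so the Secret should be protected like the password itself.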

Create the Secret object:

# kubectl apply -f secret-harbor.yaml

secret/harborlogin created

Delete the Secret object:

# kubectl delete secrets harborlogin

Alternatively, create the Secret directly from the command line:

# kubectl create secret docker-registry harborlogin \

--docker-server=reg.k8stest.com \

--docker-username='admin' \

--docker-password='Harbor12345'

secret/harborlogin created

View the secrets:

# kubectl get secret

NAME TYPE DATA AGE

default-token-x8szs kubernetes.io/service-account-token 3 5d

harborlogin kubernetes.io/dockerconfigjson 1 25s

View the Secret's content:

# kubectl get secret harborlogin --output=yaml

apiVersion: v1
data:
  .dockerconfigjson: eyJhdXRocyI6eyJyZWcuazhzdGVzdC5jb20iOnsidXNlcm5hbWUiOiJhZG1pbiIsInBhc3N3b3JkIjoiSGFyYm9yMTIzNDUiLCJhdXRoIjoiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9In19fQ==
kind: Secret
metadata:
  creationTimestamp: "2021-09-17T15:03:46Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:.dockerconfigjson: {}
      f:type: {}
    manager: kubectl-create
    operation: Update
    time: "2021-09-17T15:03:46Z"
  name: harborlogin
  namespace: default
  resourceVersion: "57527"
  selfLink: /api/v1/namespaces/default/secrets/harborlogin
  uid: fca0334d-eecb-4493-b82c-799caee2cb27
type: kubernetes.io/dockerconfigjson

Note: no namespace was specified, so the Secret was created in the default namespace. A Pod can only reference image pull secrets from its own namespace.

<4>. Create an application that pulls the private image:

Set the imagePullSecrets field in the YAML file:

...
spec:
  imagePullSecrets:
  - name: harborlogin
  containers:
  - name: xxx
    image: reg.k8stest.com/testproj/kube-controllers:v3.20.0
...

2) Check the images required for the deployment:

# wget https://docs.projectcalico.org/manifests/calico.yaml

# cat calico.yaml |grep -i image

image: docker.io/calico/cni:v3.20.0

image: docker.io/calico/pod2daemon-flexvol:v3.20.0

image: docker.io/calico/node:v3.20.0

image: docker.io/calico/kube-controllers:v3.20.0

Pull the Calico images:

In an internal network, pull them on a machine with Internet access:

docker pull docker.io/calico/cni:v3.20.0

docker pull docker.io/calico/pod2daemon-flexvol:v3.20.0

docker pull docker.io/calico/node:v3.20.0

docker pull docker.io/calico/kube-controllers:v3.20.0

Push the images to the private registry:

docker tag calico/cni:v3.20.0 reg.k8stest.com/testproj/cni:v3.20.0

docker tag calico/node:v3.20.0 reg.k8stest.com/testproj/node:v3.20.0

docker tag calico/pod2daemon-flexvol:v3.20.0 reg.k8stest.com/testproj/pod2daemon-flexvol:v3.20.0

docker tag calico/kube-controllers:v3.20.0 reg.k8stest.com/testproj/kube-controllers:v3.20.0

docker login -u admin -p Harbor12345 http://reg.k8stest.com

docker push reg.k8stest.com/testproj/cni:v3.20.0

docker push reg.k8stest.com/testproj/node:v3.20.0

docker push reg.k8stest.com/testproj/pod2daemon-flexvol:v3.20.0

docker push reg.k8stest.com/testproj/kube-controllers:v3.20.0
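The pull, tag and push sequence follows the same pattern for every image, so it can be driven by a list. A minimal sketch, assuming a single machine that can reach both Docker Hub and the Harbor registry (in a fully internal network the pull and push halves run on different machines, as described above); `IMAGES`, `PRIVATE_PREFIX`, `private_name`, `run` and `mirror_image` are hypothetical names, and with `DRY_RUN=1` (the default here) the docker commands are only printed:

```shell
#!/usr/bin/env bash
set -euo pipefail

PRIVATE_PREFIX=reg.k8stest.com/testproj
DRY_RUN=${DRY_RUN:-1}
IMAGES=(
  docker.io/calico/cni:v3.20.0
  docker.io/calico/pod2daemon-flexvol:v3.20.0
  docker.io/calico/node:v3.20.0
  docker.io/calico/kube-controllers:v3.20.0
)

# private_name IMAGE: keep only the last path component (name:tag) under PRIVATE_PREFIX
private_name() {
  echo "$PRIVATE_PREFIX/${1##*/}"
}

# run CMD...: print the command in dry-run mode, execute it otherwise
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi
}

mirror_image() {
  local src=$1 dst
  dst=$(private_name "$src")
  run docker pull "$src"
  run docker tag "$src" "$dst"
  run docker push "$dst"
}

for img in "${IMAGES[@]}"; do
  mirror_image "$img"
done
```

The target names match the ones the sed command below writes into calico.yaml, since both keep only the image name and tag under reg.k8stest.com/testproj.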

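Note: Kubernetes needs an image named "pause" to create Pods; its name and tag is "k8s.gcr.io/pause:3.2", and by default it is downloaded from the k8s.gcr.io registry.

In a private cloud environment, push it to the private registry and set the kubelet startup flag --pod-infra-container-image to the image name in that registry.

On a machine with Internet access, download the pause and coredns images (coredns is needed later for the DNS service) and save them as tar files; then load, retag and push them to the Harbor registry: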

docker image load < pause3.2.tar

docker image load < coredns1.7.0.tar

docker tag k8s.gcr.io/pause:3.2 reg.k8stest.com/testproj/pause:v3.2

docker tag k8s.gcr.io/coredns:1.7.0 reg.k8stest.com/testproj/coredns:v1.7.0

docker push reg.k8stest.com/testproj/pause:v3.2

docker push reg.k8stest.com/testproj/coredns:v1.7.0

3) Deploy the Calico network plugin

Point the image addresses in calico.yaml at the private registry:

sed -i 's/docker.io\/calico/reg.k8stest.com\/testproj/g' calico.yaml
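Before applying the edited manifest, it can be worth confirming that the sed command caught every image. A minimal sketch, assuming each image reference sits on its own `image: <ref>` line as in the grep output above; foreign_images is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: list image references in a manifest that do not point at the private registry.
# Assumption: each image appears on its own "image: <ref>" line (optionally after "- ").
REGISTRY="${REGISTRY:-reg.k8stest.com/testproj}"

foreign_images() {
  grep -E '^[[:space:]-]*image:' "$1" \
    | sed -E 's/^[[:space:]-]*image:[[:space:]]*//' \
    | grep -v -- "^${REGISTRY}/" || true
}

# Usage: bash check-images.sh calico.yaml   (no output means every image was rewritten)
if [ -n "${1:-}" ]; then
  foreign_images "$1"
fi
```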

kubectl --kubeconfig=/etc/kubernetes/kubeconfig apply -f calico.yaml

Watch the deployment status:

kubectl --kubeconfig=/etc/kubernetes/kubeconfig get pods --all-namespaces -w

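Note:

When running kubectl apply -f calico.yaml you must specify the kubeconfig file; otherwise kubectl fails with the following error:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

Alternatively, put the kubeconfig path in the KUBECONFIG environment variable: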

echo "export KUBECONFIG=/etc/kubernetes/kubeconfig" >> ~/.bash_profile

source ~/.bash_profile

This environment variable must be configured on every node.
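Running the echo command above a second time appends a duplicate line. A minimal idempotent variant is sketched here; it only appends the export when the exact line is not already present. The function name ensure_line and the --run switch are hypothetical; run it once on each node.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if that exact line is not already there.
# ensure_line is a hypothetical helper name, not a system command.
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  # -q: no output, -x: match the whole line, -F: fixed string (no regex)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

if [ "${1:-}" = "--run" ]; then
  ensure_line "$HOME/.bash_profile" 'export KUBECONFIG=/etc/kubernetes/kubeconfig'
fi
```

After running it with --run, load the profile with `source ~/.bash_profile` as before, or open a new login shell.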

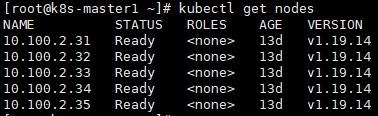

After the deployment finishes, check the nodes:

# kubectl get nodes

The nodes are now in the Ready state.

4) Install calicoctl

Download URL: https://github.com/projectcalico/calicoctl/releases/download/v3.20.0/calicoctl

cp calicoctl /usr/local/bin/

chmod +x /usr/local/bin/calicoctl

# calicoctl version

Client Version: v3.20.0

Git commit: 38b00edd

Cluster Version: v3.20.0

Cluster Type: k8s,bgp,kdd

# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+
| PEER ADDRESS |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+--------------+-------------------+-------+----------+-------------+
| 10.100.2.32  | node-to-node mesh | up    | 15:22:06 | Established |
| 10.100.2.33  | node-to-node mesh | up    | 15:22:06 | Established |
| 10.100.2.34  | node-to-node mesh | up    | 15:22:06 | Established |
| 10.100.2.35  | node-to-node mesh | up    | 15:22:06 | Established |
+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.
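With five nodes the peer table is easy to read by eye, but the same check can be scripted. A minimal sketch, assuming the pipe-delimited layout shown above (PEER ADDRESS in the first column, INFO in the last); unestablished_peers is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: read "calicoctl node status" output on stdin and print every BGP peer
# whose INFO column is not "Established". Assumes the pipe-delimited table shown above.
unestablished_peers() {
  awk -F'|' '
    # Data rows look like: | 10.100.2.32 | node-to-node mesh | up | 15:22:06 | Established |
    NF >= 6 && $2 ~ /[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+/ {
      peer = $2; info = $6
      gsub(/ /, "", peer)
      gsub(/^ +| +$/, "", info)
      if (info != "Established") print peer
    }'
}
```

`calicoctl node status | unestablished_peers` prints nothing when every peer is Established. For another master, only calicoctl has to run remotely, for example `ssh k8s-master2 calicoctl node status | unestablished_peers`, assuming SSH access as root like the scp commands use.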

Copy calicoctl to the other master nodes:

scp /usr/local/bin/calicoctl k8s-master2:/usr/local/bin/

scp /usr/local/bin/calicoctl k8s-master3:/usr/local/bin/

On each of the other master nodes, run calicoctl node status and check that every peer's STATE is up and its INFO is Established.

3>. Problems encountered:

# kubectl get pods -n kube-system -o wide|grep calico

calico-kube-controllers-84b486467c-zxtpq 1/1 Running 4 16d 192.168.162.3 10.100.2.34 <none> <none>

calico-node-f8hg4 1/1 Running 2 16d 10.100.2.34 10.100.2.34 <none> <none>

calico-node-jxhdg 1/1 Running 3 16d 10.100.2.32 10.100.2.32 <none> <none>

calico-node-mk665 1/1 Running 3 16d 10.100.2.31 10.100.2.31 <none> <none>

calico-node-mtfhd 0/1 Running 0 3m33s 10.100.2.33 10.100.2.33 <none> <none>

calico-node-tkd6g 1/1 Running 2 16d 10.100.2.35 10.100.2.35 <none> <none>

The pod calico-node-mtfhd shows 0/1 in the READY column, i.e. it is not ready.
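On a larger cluster, spotting the one pod that is not ready is easier with a filter. A minimal sketch, assuming the default `kubectl get pods -n <namespace>` column order (NAME, READY, ...; not the --all-namespaces layout); not_ready_pods is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: read "kubectl get pods" output on stdin (with or without the header line)
# and print the name of every pod whose READY column x/y has x != y.
# Assumes the column order NAME READY STATUS ... (i.e. no --all-namespaces).
not_ready_pods() {
  awk '
    NF < 2 || $1 == "NAME" { next }
    {
      split($2, ready, "/")
      if (ready[1] != ready[2]) print $1
    }'
}
```

For the listing above, `kubectl get pods -n kube-system -o wide | grep calico | not_ready_pods` would print calico-node-mtfhd. To block until the Calico pods are ready instead, `kubectl wait --for=condition=Ready pod -l k8s-app=calico-node -n kube-system --timeout=300s` can be used; k8s-app=calico-node is the pod label set in the stock calico.yaml.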

# kubectl get pod calico-node-mtfhd -n kube-system -o yaml | kubectl replace --force -f -

pod "calico-node-mtfhd" deleted

pod/calico-node-mtfhd replaced

Delete and recreate the pod.

# kubectl get pods -n kube-system -o wide|grep calico

calico-kube-controllers-84b486467c-zxtpq 1/1 Running 4 16d 192.168.162.3 10.100.2.34 <none> <none>

calico-node-6cbxz 0/1 Running 0 2m58s 10.100.2.33 10.100.2.33 <none> <none>

calico-node-f8hg4 1/1 Running 2 16d 10.100.2.34 10.100.2.34 <none> <none>

calico-node-jxhdg 1/1 Running 3 16d 10.100.2.32 10.100.2.32 <none> <none>

calico-node-mk665 1/1 Running 3 16d 10.100.2.31 10.100.2.31 <none> <none>

calico-node-tkd6g 1/1 Running 2 16d 10.100.2.35 10.100.2.35 <none> <none>

READY is still 0/1.

# kubectl describe pod calico-node-6cbxz -n kube-system

Warning Unhealthy 6m54s kubelet Readiness probe failed: 2021-10-04 13:39:08.347 [INFO][141] confd/health.go 180: Number of node(s) with BGP peering established = 0

calico/node is not ready: BIRD is not ready: BGP not established with 10.100.2.31,10.100.2.32,10.100.2.34,10.100.2.35

Warning Unhealthy 6m44s kubelet Readiness probe failed: 2021-10-04 13:39:18.352 [INFO][171] confd/health.go 180: Number of node(s) with BGP peering established = 0

calico/node is not ready: BIRD is not ready: BGP not established with 10.100.2.31,10.100.2.32,10.100.2.34,10.100.2.35

Warning Unhealthy 114s (x22 over 5m24s) kubelet (combined from similar events): Readiness probe failed: 2021-10-04 13:44:08.354 [INFO][1021] confd/health.go 180: Number of node(s) with BGP peering established = 0

calico/node is not ready: BIRD is not ready: BGP not established with 10.100.2.31,10.100.2.32,10.100.2.34,10.100.2.35

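Cause:

The Harbor registry is also deployed on k8s-master3, which created additional bridge interfaces with their own IP addresses. Calico picked one of these bridge interfaces instead of the host NIC, so BGP peering with the other nodes could not be established (see the readiness probe events above).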

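Solution:

In calico.yaml, add the IP_AUTODETECTION_METHOD environment variable to the calico-node container, next to CALICO_IPV4POOL_CIDR, so that Calico only uses interfaces whose names match the regular expression ens.* (adjust the pattern to the host NIC name), then re-apply the manifest:

# vim calico.yaml

            - name: CALICO_IPV4POOL_CIDR
              value: "192.168.0.0/16"
            - name: IP_AUTODETECTION_METHOD
              value: "interface=ens.*"

# kubectl apply -f calico.yaml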
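Before (re)applying, one can preview which interface names a pattern such as ens.* selects on a host like k8s-master3, where Docker/Harbor bridge interfaces (docker0, br-...) sit next to the real NIC. A minimal sketch; it uses grep -E as an approximation of Calico's own regular-expression matching, which is an assumption, and matching_ifaces and list_ifaces are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Sketch: preview which interface names an IP_AUTODETECTION_METHOD pattern such as
# "interface=ens.*" would select. grep -E approximates Calico's own regex matching
# (an assumption); matching_ifaces and list_ifaces are hypothetical helpers.
PATTERN="${PATTERN:-ens.*}"

# Read interface names (one per line) on stdin, print those matching $PATTERN.
matching_ifaces() {
  grep -E -- "$PATTERN" || true
}

# List local interface names from "ip -o link show", dropping "@peer" suffixes.
list_ifaces() {
  ip -o link show | awk -F': ' '{print $2}' | cut -d@ -f1
}

if [ "${1:-}" = "--local" ]; then
  list_ifaces | matching_ifaces
fi
```

On the node, `bash preview-iface.sh --local` (file name arbitrary) should print only the host NIC. Calico's IP autodetection documentation also describes updating a running DaemonSet in place, for example `kubectl set env daemonset/calico-node -n kube-system 'IP_AUTODETECTION_METHOD=interface=ens.*'`.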

Reference: https://blog.csdn.net/samginshen/article/details/105424652

9. Deploy the DNS add-on

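1>. On every node, add the following two parameters to the kubelet startup parameters (the file below is from k8s-master1; --hostname-override must be set to each node's own IP):

--cluster-dns=172.16.0.100: the ClusterIP address of the DNS service

--cluster-domain=cluster.local: the domain name configured in the DNS service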

vim /etc/kubernetes/kubelet

KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--config=/etc/kubernetes/kubelet.config \

--pod-infra-container-image=reg.k8stest.com/testproj/pause:v3.2 \

--hostname-override=10.100.2.31 \

--network-plugin=cni \

--cluster-dns=172.16.0.100 \

--cluster-domain=cluster.local \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"
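The --cluster-dns value has to be the same address as the clusterIP of the kube-dns Service created later in this section (172.16.0.100). A minimal sketch for reading a flag back out of the options file, assuming flags are separated by spaces as in KUBELET_ARGS above; kubelet_flag is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: print the value of a "--name=value" flag from a kubelet options file such as
# /etc/kubernetes/kubelet. Assumes flags are separated by spaces, as in KUBELET_ARGS.
kubelet_flag() {
  local file="$1" name="$2"
  tr ' ' '\n' < "$file" \
    | grep -- "^--${name}=" \
    | head -n 1 \
    | cut -d= -f2- \
    | tr -d '"\\'
}

# Usage: bash kubelet-flag.sh /etc/kubernetes/kubelet cluster-dns
if [ "$#" -eq 2 ]; then
  kubelet_flag "$1" "$2"
fi
```

Once the Service exists, the two values can be compared on a node with `[ "$(kubelet_flag /etc/kubernetes/kubelet cluster-dns)" = "$(kubectl get svc kube-dns -n kube-system -o jsonpath='{.spec.clusterIP}')" ] && echo match`.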

Restart the kubelet service:

systemctl restart kubelet

2>. Create the CoreDNS resource objects:

Note: you can start from the official template and adapt it: https://github.com/coredns/deployment/blob/master/kubernetes/coredns.yaml.sed

1) Create the YAML file for the RBAC objects (for clusters with RBAC enabled):

# vim coredns-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

2) Create the YAML file for the ConfigMap object:

# vim coredns-ConfigMap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    cluster.local {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local 172.16.0.0/16 {
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
    . {
        cache 30
        loadbalance
        forward . /etc/resolv.conf
    }

3) Create the YAML file for the Deployment object:

# vim coredns-Deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      imagePullSecrets:
      - name: harborlogin
      containers:
      - name: coredns
        image: reg.k8stest.com/testproj/coredns:v1.7.0
        imagePullPolicy: IfNotPresent
        #resources:
        #  limits:
        #    memory: 170Mi
        #  requests:
        #    cpu: 100m
        #    memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile

4) Create the YAML file for the Service object:

# vim coredns-Service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 172.16.0.100
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153
    protocol: TCP

3>. Create the CoreDNS service

# kubectl apply -f coredns-rbac.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

# kubectl apply -f coredns-ConfigMap.yaml

configmap/coredns created

# kubectl apply -f coredns-Deployment.yaml

deployment.apps/coredns created

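Note: the official template does not set replicas, so CoreDNS runs a single replica by default. In production you can run several, e.g. set replicas: 3 (as the Deployment above already does) and run kubectl apply -f coredns-Deployment.yaml again.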

# kubectl apply -f coredns-Service.yaml

service/kube-dns created

Check whether the resources were created successfully:

# kubectl get all -n kube-system -o wide

Or check each resource type separately:

# kubectl get deploy -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

calico-kube-controllers 1/1 1 1 20d

coredns 3/3 3 3 17h

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-84b486467c-zxtpq 1/1 Running 14 17d

calico-node-7fnh2 1/1 Running 15 19h

calico-node-f948v 1/1 Running 5 19h

calico-node-lpmbj 1/1 Running 11 20h

calico-node-pg85l 1/1 Running 10 20h

calico-node-rx6sb 1/1 Running 7 20h

coredns-5c69fdfff5-2jql2 1/1 Running 4 17h

coredns-5c69fdfff5-46g9r 1/1 Running 4 17h

coredns-5c69fdfff5-qx9x7 1/1 Running 4 17h

# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 172.16.0.100 <none> 53/UDP,53/TCP,9153/TCP 8m10s

View the details of the kube-dns service:

# kubectl describe svc kube-dns -n kube-system

Name: kube-dns

Namespace: kube-system

Labels: k8s-app=kube-dns

kubernetes.io/cluster-service=true

kubernetes.io/name=CoreDNS

Annotations: prometheus.io/port: 9153

prometheus.io/scrape: true

Selector: k8s-app=kube-dns

Type: ClusterIP

IP: 172.16.0.100

Port: dns 53/UDP

TargetPort: 53/UDP

Endpoints: 192.168.139.71:53,192.168.254.19:53,192.168.72.203:53

Port: dns-tcp 53/TCP

TargetPort: 53/TCP

Endpoints: 192.168.139.71:53,192.168.254.19:53,192.168.72.203:53

Port: metrics 9153/TCP

TargetPort: 9153/TCP

Endpoints: 192.168.139.71:9153,192.168.254.19:9153,192.168.72.203:9153

Session Affinity: None

Events: <none>

4>. Verify the DNS service:

Download the busybox image and push it to the private registry:

# docker pull busybox:1.28.4

# docker tag busybox:1.28.4 reg.k8stest.com/testproj/busybox:v1.28.4

# docker push reg.k8stest.com/testproj/busybox:v1.28.4

Create a test Pod for running nslookup:

# vim busybox.yaml

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  imagePullSecrets:
  - name: harborlogin
  containers:
  - name: busybox
    image: reg.k8stest.com/testproj/busybox:v1.28.4
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always

# kubectl apply -f busybox.yaml

# kubectl get pods busybox

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 23 17d
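Once the busybox Pod is Running, the lookups used in this section can be run in one pass. A minimal sketch, assuming the Pod name busybox in namespace default from busybox.yaml above and that nslookup exits non-zero when a lookup fails (consistent with the "exit code 1" output in this section); the list of names is only an example, and lookup and check_dns are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Sketch: resolve a list of names from inside the busybox test Pod and report each result.
# Assumptions: Pod "busybox" in namespace "default" (busybox.yaml above); nslookup exits
# non-zero when a lookup fails. lookup and check_dns are hypothetical helper names.
POD="${POD:-busybox}"
NAMESPACE="${NAMESPACE:-default}"

# Resolve one name inside the Pod; only the exit status is used.
lookup() {
  kubectl exec -n "$NAMESPACE" "$POD" -- nslookup "$1" >/dev/null 2>&1
}

# Print "OK <name>" or "FAIL <name>" per argument; return 1 if any lookup failed.
check_dns() {
  local name failed=0
  for name in "$@"; do
    if lookup "$name"; then
      echo "OK   $name"
    else
      echo "FAIL $name"
      failed=1
    fi
  done
  return "$failed"
}

if [ "${1:-}" = "--run" ]; then
  check_dns kubernetes.default kube-dns.kube-system.svc.cluster.local www.baidu.com
fi
```

`bash check-dns.sh --run` (file name arbitrary) prints one OK/FAIL line per name, and its exit status is non-zero if any lookup failed.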

# kubectl exec busybox -- cat /etc/resolv.conf

nameserver 172.16.0.100

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

Problem encountered during DNS resolution: lookups fail

# kubectl exec busybox -- nslookup kubernetes.default

;; connection timed out; no servers could be reached

command terminated with exit code 1

# kubectl exec -it busybox -- /bin/sh

/ # nslookup www.baidu.com

Server: 172.16.0.100

Address: 172.16.0.100:53

Non-authoritative answer:

www.baidu.com canonical name = www.a.shifen.com

*** Can't find www.baidu.com: No answer

/ # nslookup kubernetes.default

;; connection timed out; no servers could be reached

/ # ping 172.16.0.100

PING 172.16.0.100 (172.16.0.100): 56 data bytes

64 bytes from 172.16.0.100: seq=0 ttl=64 time=0.100 ms

64 bytes from 172.16.0.100: seq=1 ttl=64 time=0.063 ms

/ # ping www.baidu.com

PING www.baidu.com (112.80.248.76): 56 data bytes

64 bytes from 112.80.248.76: seq=0 ttl=55 time=19.811 ms

64 bytes from 112.80.248.76: seq=1 ttl=55 time=19.690 ms

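Note: the ping succeeds because www.baidu.com is resolved by the upstream DNS server.

According to reports found online, this is a problem with the busybox image itself (version 1.33.1 was in use at first). After switching to busybox:1.28.4, the lookup works: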

# kubectl exec busybox -- nslookup kubernetes.default

Server: 172.16.0.100

Address 1: 172.16.0.100 172.16.0.100

Name: kubernetes.default

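Address 1: 172.16.0.1

Last updated 2021-10-08 20:55:16, tagged "k8s".

This site is licensed under Creative Commons Attribution 4.0 International: you may freely republish and quote the content, provided you credit the author and cite the source of the article.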
